Human-Computer Interaction (Q4 2024 - Q1 2025)

Introduction

Human-Computer Interaction (HCI) and Human-Robot Interaction (HRI) are rapidly evolving fields focused on creating seamless, effective, and intuitive interactions between humans and technology. Current research, supported by significant funding initiatives totaling approximately $29.7M across recent projects, emphasizes moving beyond controlled laboratory settings into complex, real-world applications such as manufacturing, construction, healthcare, assistive technology, and personal robotics. There is a strong push towards developing systems that are not just functional but also adaptive, personalized, and context-aware. Key themes include: designing robots and interfaces that account for human factors, cognitive states, and user values; leveraging multimodal sensing (vision, haptics, physiological signals) and immersive technologies like Mixed Reality (MR) and Virtual Reality (VR) to enhance perception, understanding, and collaboration; developing AI and machine learning techniques for robots and agents to better interpret human actions (like hand gestures), predict intent, and learn from observation or interaction; enabling effective human-agent teaming, particularly in safety-critical or labor-intensive domains; improving accessibility through novel interfaces (e.g., advanced haptics, brain-computer interfaces, context-aware single-switch systems) for diverse user populations, including those with disabilities; and building robust cyberinfrastructure, including edge computing, to support real-time interaction. The field is increasingly interdisciplinary, integrating insights from computer science, engineering, design, psychology, and cognitive science to address the multifaceted challenges of human-technology partnerships.

Pressing Questions and Promising Directions

The field of Human-Computer Interaction faces several critical questions that will shape its future development. For each pressing question, we explore multiple promising directions for research and innovation.

Question 1

How can we develop computational models and algorithms that enable robots and AI systems to accurately and robustly infer human intentions, cognitive states (e.g., engagement, workload, trust), and affective states in real-time from multimodal sensory data in dynamic environments?

Promising Directions:

Deep Learning for Multimodal Fusion

Developing deep learning models for multimodal fusion of behavioral cues (gaze, posture, gestures) and physiological signals (EEG, ECG, EDA).
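To make the idea of fusing modalities concrete, the following is a minimal sketch of late fusion, one of the simplest fusion strategies: each modality is assumed to have its own upstream model that outputs class probabilities (for example, "engaged" vs. "disengaged"), and the outputs are combined by a weighted average. The function name `late_fusion`, the modality names, and the weights are hypothetical and chosen only for illustration; a deep fusion model would typically learn the combination rather than use fixed weights.

```python
from typing import Dict, List


def late_fusion(
    probs_by_modality: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Combine per-modality class probabilities by a weighted average.

    probs_by_modality maps a modality name (hypothetical, e.g. "gaze") to a
    probability vector over the same set of classes. weights maps each
    modality name to a non-negative weight; missing modalities get weight 0.
    """
    if not probs_by_modality:
        raise ValueError("at least one modality is required")

    n_classes = len(next(iter(probs_by_modality.values())))
    fused = [0.0] * n_classes
    total_weight = 0.0

    for name, probs in probs_by_modality.items():
        if len(probs) != n_classes:
            raise ValueError(f"modality {name!r} has a different number of classes")
        w = weights.get(name, 0.0)
        total_weight += w
        for i, p in enumerate(probs):
            fused[i] += w * p

    if total_weight == 0.0:
        raise ValueError("weights sum to zero")

    # Renormalize so the fused vector is again a probability distribution.
    return [value / total_weight for value in fused]


# Illustrative values only: two modalities, two classes.
example = late_fusion(
    {"gaze": [0.8, 0.2], "eda": [0.4, 0.6]},
    {"gaze": 1.0, "eda": 1.0},
)
```

With equal weights the fused estimate is the plain average of the two modalities; raising one weight lets a more reliable sensor dominate the result.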

Recommended Faculty:

- Jiajun Wu (Stanford University)

- Boyuan Chen (Duke University)

- Matei Ciocarlie (Columbia University)

- Henny Admoni (Carnegie Mellon)

- Chen Feng (New York University)

- Sandeep Chinchali (University of Texas at Austin)

- Jitendra Malik (Berkeley)

Real-time Cognitive Analysis

Investigating methods for real-time cognitive load estimation and engagement tracking.

Recommended Faculty:

- Henny Admoni (Carnegie Mellon)

- Bilge Mutlu (University of Wisconsin Madison)

- Chien-Ming Huang (Johns Hopkins University)

- Joydeep Biswas (University of Texas at Austin)

- Jiajun Wu (Stanford University)

- Boyuan Chen (Duke University)

- Andrea Bajcsy (Carnegie Mellon)

Explainable AI Systems

Creating explainable AI (XAI) techniques to make system inferences about human states transparent.

Recommended Faculty:

- Henny Admoni (Carnegie Mellon)

- Bilge Mutlu (University of Wisconsin Madison)

- Chien-Ming Huang (Johns Hopkins University)

- Joydeep Biswas (University of Texas at Austin)

- Andrea Bajcsy (Carnegie Mellon)

- Jiajun Wu (Stanford University)

- Carl Vondrick (Columbia University)

Predictive Behavioral Modeling

Building predictive models of human behavior based on interaction history and contextual information.

Recommended Faculty:

- Henny Admoni (Carnegie Mellon)

- Chien-Ming Huang (Johns Hopkins University)

- Bilge Mutlu (University of Wisconsin Madison)

- Andrea Bajcsy (Carnegie Mellon)

- Boyuan Chen (Duke University)

- Jiajun Wu (Stanford University)

- Joydeep Biswas (University of Texas at Austin)

Question 2

What are the most effective methods for achieving seamless and efficient co-adaptation between humans and autonomous systems, allowing both the human and the system to learn from each other and improve collaborative performance over time?

Promising Directions:

Reinforcement Learning for Human-Agent Collaboration

Exploring reinforcement learning algorithms where both human and agent rewards are considered.

Recommended Faculty:

- Matthew Gombolay (Georgia Institute of Technology)

- Anca Dragan (Berkeley)

- Pieter Abbeel (Berkeley)

- Sandeep Chinchali (University of Texas at Austin)

- Peter Stone (University of Texas at Austin)

- Erdem Biyik (University of Southern California)

- Guanya Shi (Carnegie Mellon)

Adaptive Interface Personalization

Developing adaptive interfaces that personalize system behavior based on inferred user skill and state.

Recommended Faculty:

- Bilge Mutlu (University of Wisconsin Madison)

- Chien-Ming Huang (Johns Hopkins University)

- Henny Admoni (Carnegie Mellon)

- Guanya Shi (Carnegie Mellon)

- Sandeep Chinchali (University of Texas at Austin)

- Zackory Erickson (Carnegie Mellon)

- Matthew Gombolay (Georgia Institute of Technology)

Computational Models of Mutual Learning

Investigating state-space models and computational approaches to capture the dynamics of mutual learning in interaction.

Recommended Faculty:

- Guanya Shi (Carnegie Mellon)

- Boyuan Chen (Duke University)

- Sandeep Chinchali (University of Texas at Austin)

- Masayoshi Tomizuka (Berkeley)

- Matthew Gombolay (Georgia Institute of Technology)

- Abhishek Gupta (University of Washington)

- Koushil Sreenath (Berkeley)

Dynamic Shared Control

Designing shared control paradigms that dynamically arbitrate autonomy between human and robot based on task context and user preference.
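As an illustration of what arbitration can look like in practice, the sketch below uses linear blending, a common baseline in the shared-control literature: the executed command is a weighted mix of the human's input and the robot's suggestion, and the weight grows with the system's confidence in its estimate of the user's goal. The function name `blend_commands` and the `max_assist` cap (a stand-in for a user preference on how much help to accept) are assumptions made for this example, not a method taken from this report.

```python
from typing import List, Sequence


def blend_commands(
    human_cmd: Sequence[float],
    robot_cmd: Sequence[float],
    confidence: float,
    max_assist: float = 0.8,
) -> List[float]:
    """Linearly blend a human command with an autonomous command.

    confidence is the system's confidence in its goal estimate, clamped to
    [0, 1]. max_assist caps how much authority the robot may take, so the
    human always retains at least (1 - max_assist) of the control.
    """
    if len(human_cmd) != len(robot_cmd):
        raise ValueError("commands must have the same dimension")

    clamped = max(0.0, min(1.0, confidence))
    alpha = clamped * max_assist  # share of authority given to the robot

    return [(1.0 - alpha) * h + alpha * r for h, r in zip(human_cmd, robot_cmd)]


# Illustrative 2-D velocity commands: the human pushes along x,
# the robot suggests moving along y.
blended = blend_commands([1.0, 0.0], [0.0, 1.0], confidence=0.5)
```

At zero confidence the human command passes through unchanged; as confidence rises the robot's suggestion contributes more, up to the `max_assist` cap.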

Recommended Faculty:

- Guanya Shi (Carnegie Mellon)

- Henny Admoni (Carnegie Mellon)

- Zackory Erickson (Carnegie Mellon)

- Bilge Mutlu (University of Wisconsin Madison)

- Sandeep Chinchali (University of Texas at Austin)

- Chien-Ming Huang (Johns Hopkins University)

- Shuran Song (Stanford University)

Question 3

How can we design and implement high-fidelity, intuitive multimodal interfaces, particularly incorporating rich haptic feedback, that effectively convey complex information, enable dexterous manipulation, and enhance situational awareness across diverse applications (e.g., teleoperation, VR/MR, assistive devices)?

Promising Directions:

Advanced Haptic Rendering

Creating novel haptic rendering algorithms for realistic texture, shape, and force feedback.

Recommended Faculty:

- Heather Culbertson (University of Southern California)

- Oussama Khatib (Stanford University)

- Masayoshi Tomizuka (Berkeley)

- Roland Siegwart (ETH Zurich)

- Wendy Ju (Cornell University)

- Allison M Okamura (Stanford University)

- Jitendra Malik (Berkeley)

Semantic Haptic Mapping

Developing data-driven methods to map semantic meaning to vibrotactile or other haptic signals.

Recommended Faculty:

- Heather Culbertson (University of Southern California)

- Wendy Ju (Cornell University)

- Matei Ciocarlie (Columbia University)

- Oussama Khatib (Stanford University)

- Etienne Burdet (Imperial College London)

- Jeannette Bohg (Stanford University)

- Roland Siegwart (ETH Zurich)

Immersive Haptic Systems

Designing integrated MR/VR systems with co-located haptic feedback for immersive training and telepresence.

Recommended Faculty:

- Heather Culbertson (University of Southern California)

- Roland Siegwart (ETH Zurich)

- Matei Ciocarlie (Columbia University)

- Wendy Ju (Cornell University)

- Masayoshi Tomizuka (Berkeley)

- Oussama Khatib (Stanford University)

- Jitendra Malik (Berkeley)

Multi-sensory Integration

Investigating multi-sensory integration models (visual, auditory, haptic) for interface design.

Recommended Faculty:

- Wendy Ju (Cornell University)

- Etienne Burdet (Imperial College London)

- Heather Culbertson (University of Southern California)

- Roland Siegwart (ETH Zurich)

- Matei Ciocarlie (Columbia University)

- Yiannis Aloimonos (University of Maryland, College Park)

- Jiajun Wu (Stanford University)

Accessible Multi-modal Interfaces

Developing hardware and software for accessible multi-modal data representation (e.g., tactile graphics, sonification).

Recommended Faculty:

- Wendy Ju (Cornell University)

- Roland Siegwart (ETH Zurich)

- Heather Culbertson (University of Southern California)

- Matei Ciocarlie (Columbia University)

- Etienne Burdet (Imperial College London)

- Shuran Song (Stanford University)

- Jitendra Malik (Berkeley)

Question 4

How can robots and AI systems learn complex interaction skills, including manipulation and collaboration strategies, efficiently and safely from limited human demonstrations, observations, or interactions, especially in unstructured and unpredictable settings?

Promising Directions:

Advanced Imitation Learning

Advancing imitation learning and learning from observation techniques, particularly for fine-grained manipulation tasks (e.g., understanding hand-object interactions from video).

Recommended Faculty:

- Dorsa Sadigh (Stanford University)

- Animesh Garg (Georgia Institute of Technology)

- Jeannette Bohg (Stanford University)

- Bilge Mutlu (University of Wisconsin Madison)

- Shuran Song (Stanford University)

- Dieter Fox (University of Washington)

- Stelian Coros (ETH Zurich)

Rapid Task Adaptation

Developing few-shot and zero-shot learning methods for rapid adaptation to new tasks or users.

Recommended Faculty:

- Dorsa Sadigh (Stanford University)

- Bilge Mutlu (University of Wisconsin Madison)

- Animesh Garg (Georgia Institute of Technology)

- Dieter Fox (University of Washington)

- Chelsea Finn (Stanford University)

- Pieter Abbeel (Berkeley)

- Jeannette Bohg (Stanford University)

Simulation-to-Real Transfer

Utilizing simulation-to-real transfer techniques to train interaction policies safely and efficiently.

Recommended Faculty:

- Dorsa Sadigh (Stanford University)

- Animesh Garg (Georgia Institute of Technology)

- Dieter Fox (University of Washington)

- Andrea Bajcsy (Carnegie Mellon)

- Bilge Mutlu (University of Wisconsin Madison)

- Jeannette Bohg (Stanford University)

- Pieter Abbeel (Berkeley)

Interactive Learning Systems

Exploring interactive machine learning approaches where systems can actively query humans for clarification or feedback.

Recommended Faculty:

- Bilge Mutlu (University of Wisconsin Madison)

- Dorsa Sadigh (Stanford University)

- Henny Admoni (Carnegie Mellon)

- Chien-Ming Huang (Johns Hopkins University)

- Matthew Gombolay (Georgia Institute of Technology)

- Animesh Garg (Georgia Institute of Technology)

- Zackory Erickson (Carnegie Mellon)

Large Model Integration

Leveraging large language and vision models to provide contextual understanding and common sense reasoning for interaction.

Recommended Faculty:

- Dorsa Sadigh (Stanford University)

- Bilge Mutlu (University of Wisconsin Madison)

- Shuran Song (Stanford University)

- Matthew Gombolay (Georgia Institute of Technology)

- Dieter Fox (University of Washington)

- Henny Admoni (Carnegie Mellon)

- Chelsea Finn (Stanford University)

Question 5

What computational frameworks and control strategies are needed to ensure robust, safe, and predictable HRI/HCI performance in physically interactive scenarios within complex, dynamic environments where unexpected events and human variability are common?

Promising Directions:

Adaptive Planning and Control

Developing adaptive planning and control algorithms that explicitly model uncertainty in human behavior and the environment.

Recommended Faculty:

- Andrea Bajcsy (Carnegie Mellon)

- Hod Lipson (Columbia University)

- Panagiotis Tsiotras (Georgia Institute of Technology)

- Wendy Ju (Cornell University)

- Guanya Shi (Carnegie Mellon)

- Matthew Gombolay (Georgia Institute of Technology)

- Henny Admoni (Carnegie Mellon)

Safety Verification Methods

Creating formal methods for verifying the safety and reliability of collaborative robotic systems.

Recommended Faculty:

- Andrea Bajcsy (Carnegie Mellon)

- Chien-Ming Huang (Johns Hopkins University)

- Stelian Coros (ETH Zurich)

- Roland Siegwart (ETH Zurich)

- Henny Admoni (Carnegie Mellon)

- Bilge Mutlu (University of Wisconsin Madison)

- Shuran Song (Stanford University)

Resilient Perception Systems

Designing resilient perception systems that can handle sensor noise, occlusions, and environmental changes.

Recommended Faculty:

- Miroslav Pajic (Duke University)

- Wendy Ju (Cornell University)

- Farshad Khorrami (New York University)

- Masayoshi Tomizuka (Berkeley)

- Andrea Bajcsy (Carnegie Mellon)

- Chien-Ming Huang (Johns Hopkins University)

- Jiajun Wu (Stanford University)

Real-time Edge Computing

Investigating edge computing architectures to minimize latency for real-time safety-critical control loops in HRI.

Recommended Faculty:

- Wendy Ju (Cornell University)

- Xu Chen (University of Washington)

- Boyuan Chen (Duke University)

- Farshad Khorrami (New York University)

- Jiajun Wu (Stanford University)

- Andrea Bajcsy (Carnegie Mellon)

- Masayoshi Tomizuka (Berkeley)

Dynamic Risk Assessment

Developing methods for online risk assessment and mitigation during physical human-robot collaboration.

Recommended Faculty:

- Chien-Ming Huang (Johns Hopkins University)

- Andrea Bajcsy (Carnegie Mellon)

- Henny Admoni (Carnegie Mellon)

- Guanya Shi (Carnegie Mellon)

- Wendy Ju (Cornell University)

- Bilge Mutlu (University of Wisconsin Madison)

- Farshad Khorrami (New York University)

Key Figures and Highlighted Publications

This section provides a deeper analysis of the leading researchers driving progress in the field. We identify key faculty based on their historical contributions and evaluate their performance across several metrics relevant to this domain. Alongside this, we highlight the most significant papers published during the analysis period, as determined by those same evaluation criteria.

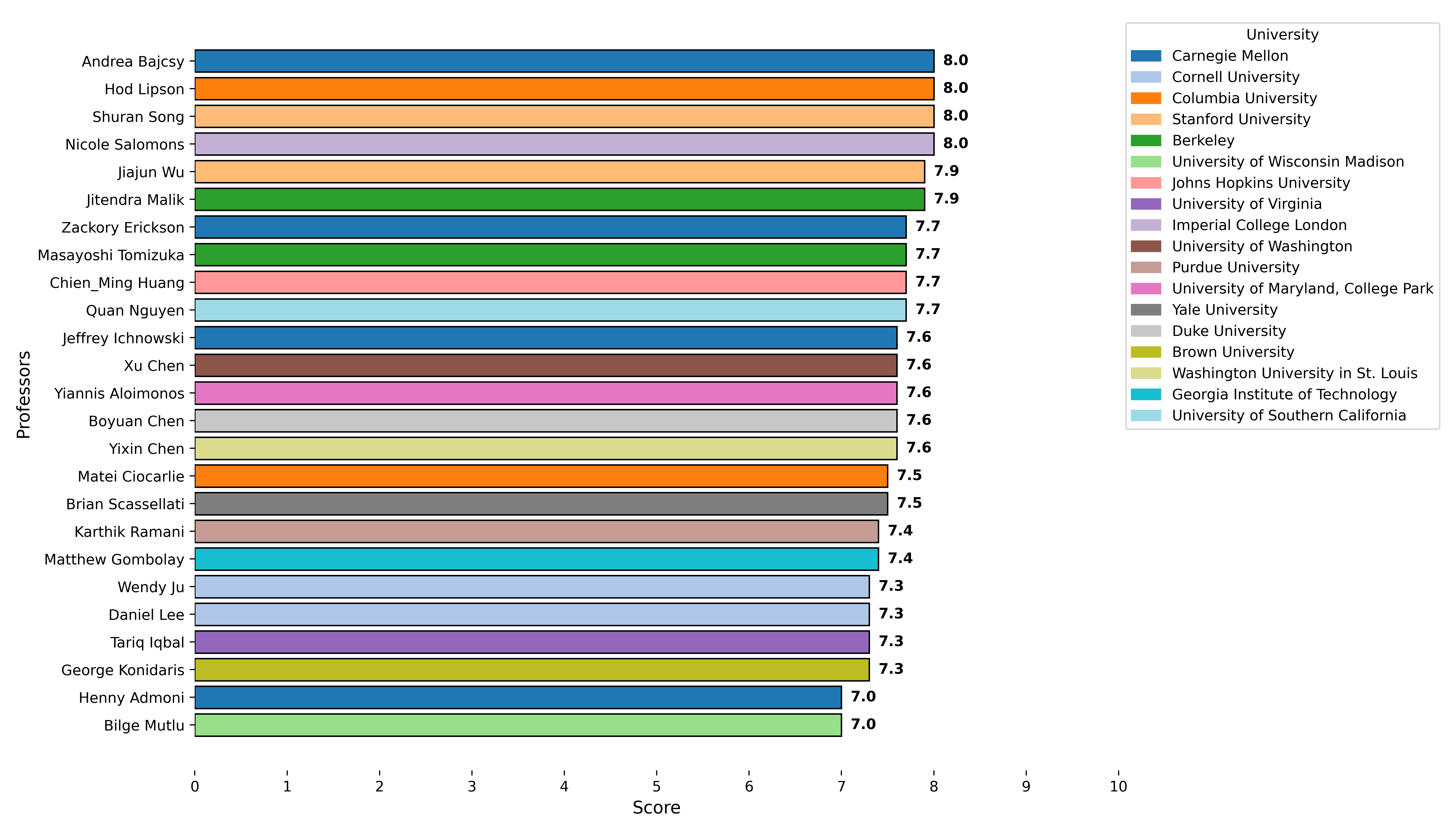

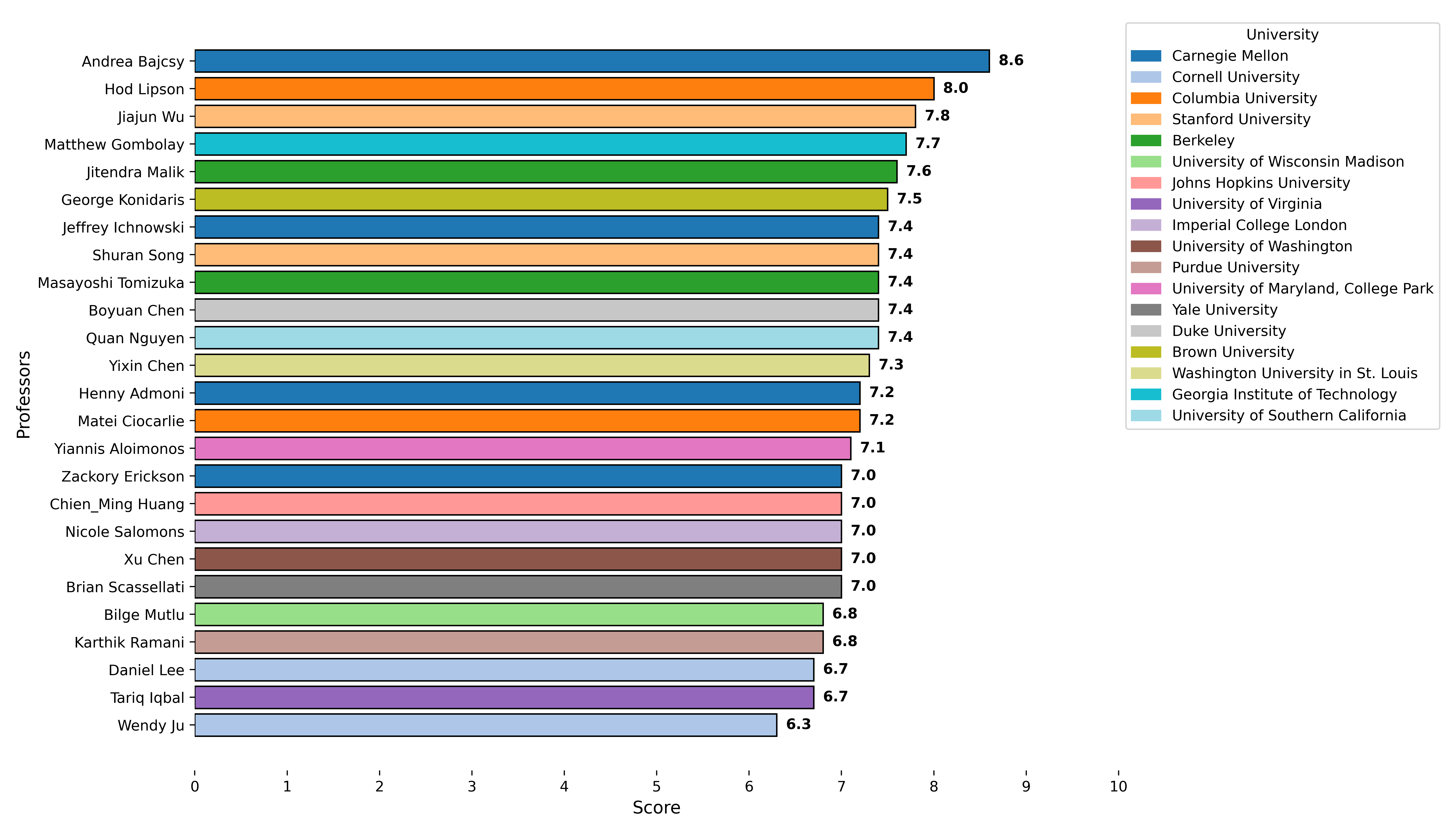

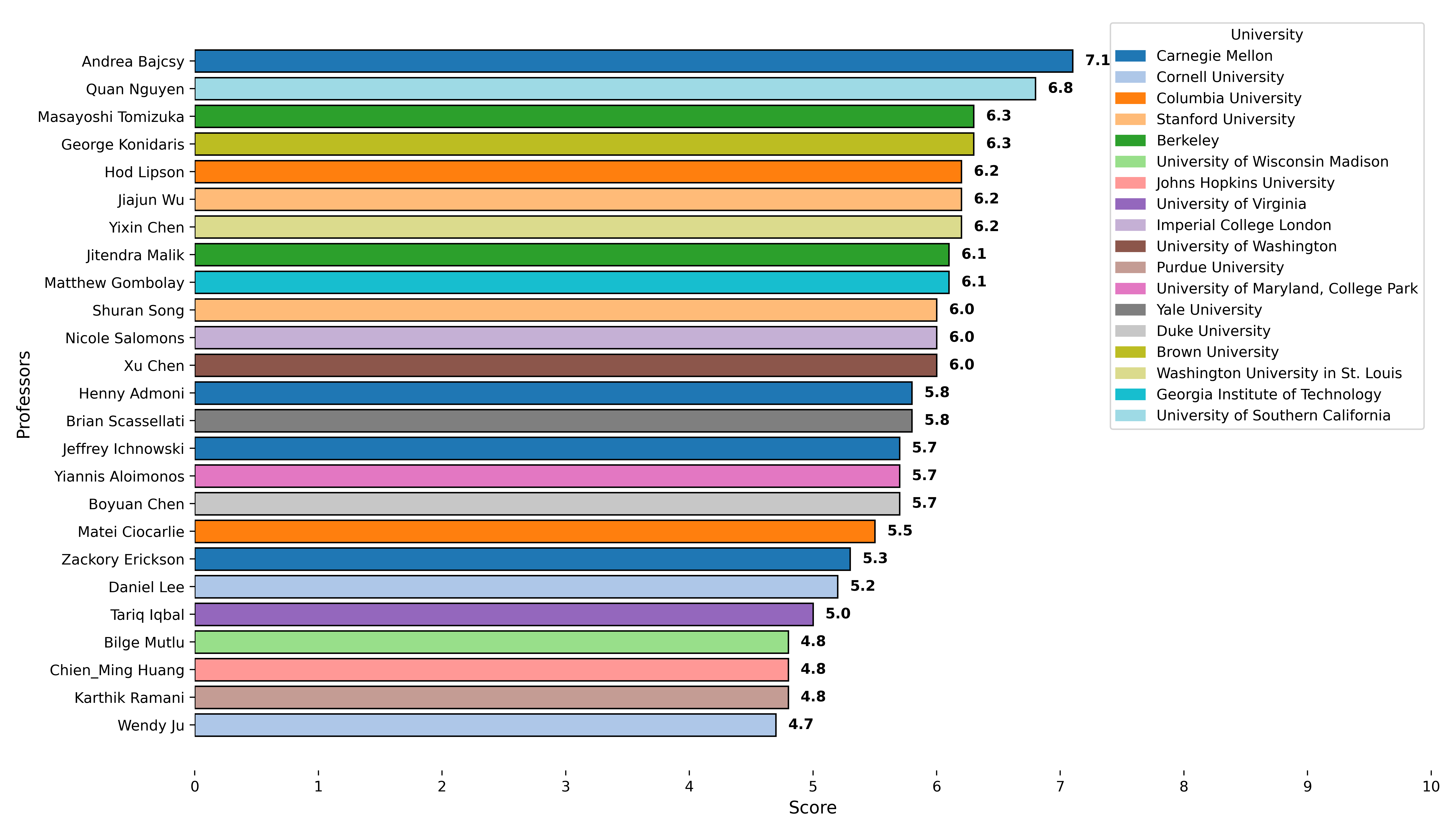

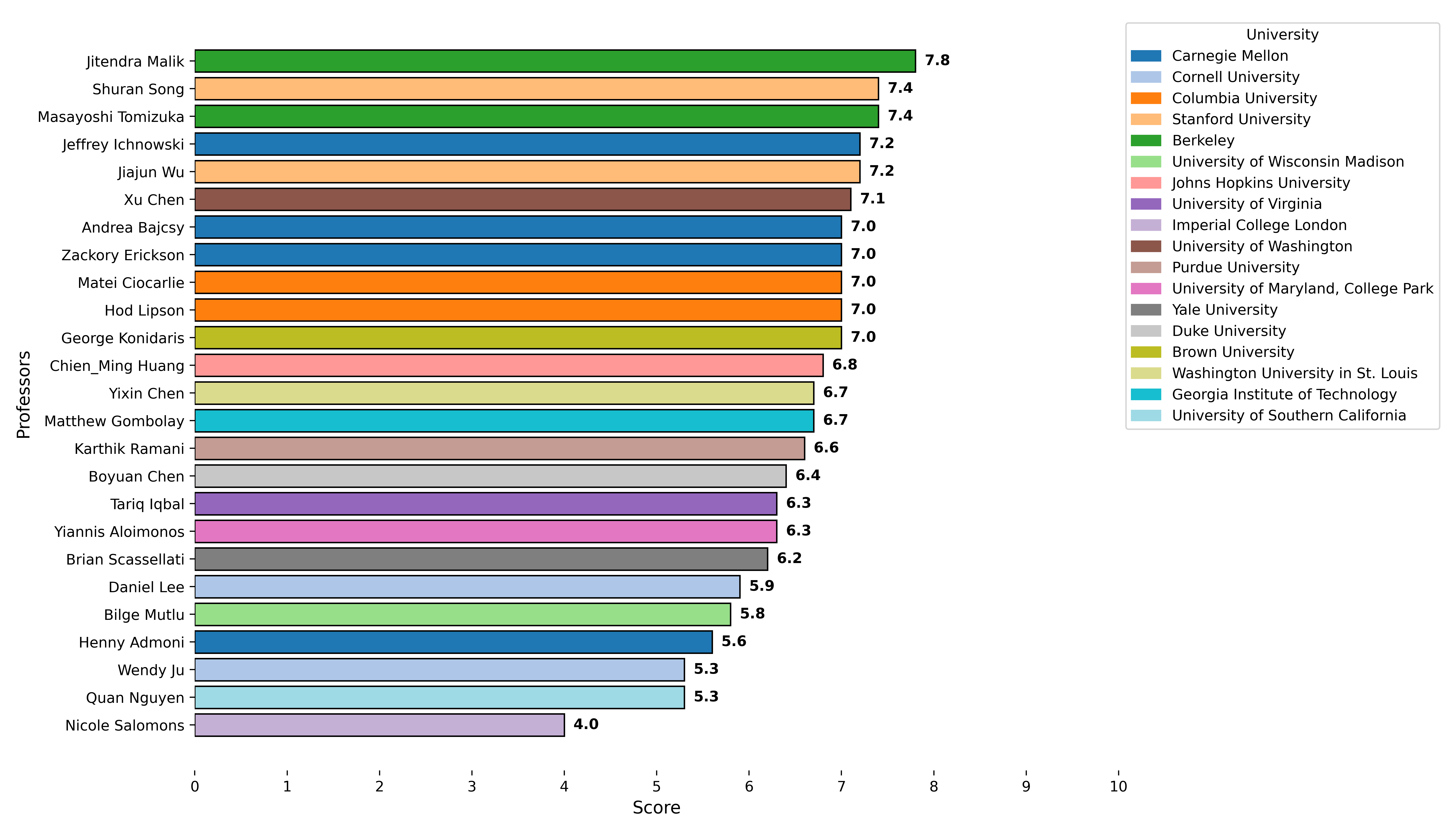

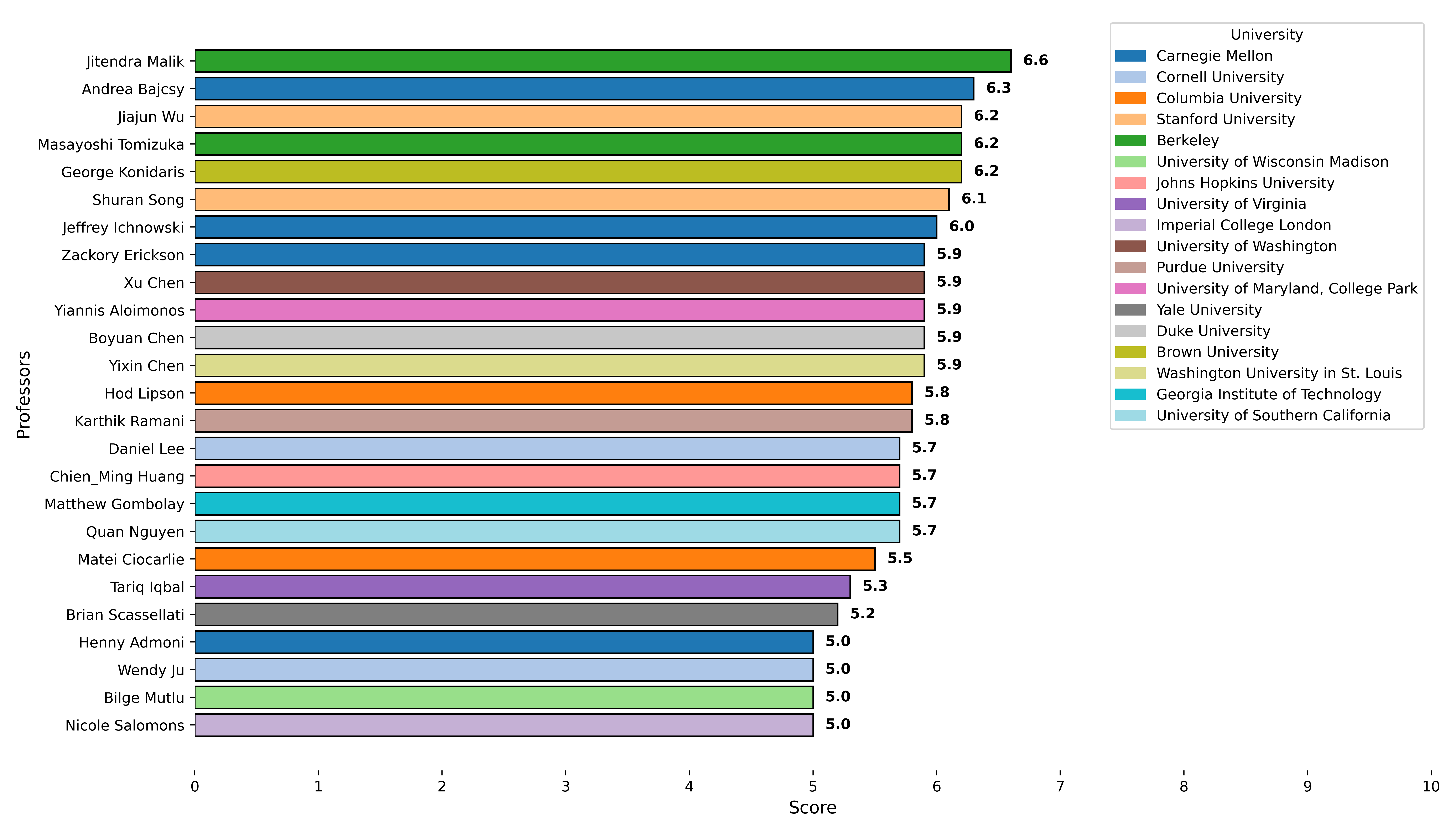

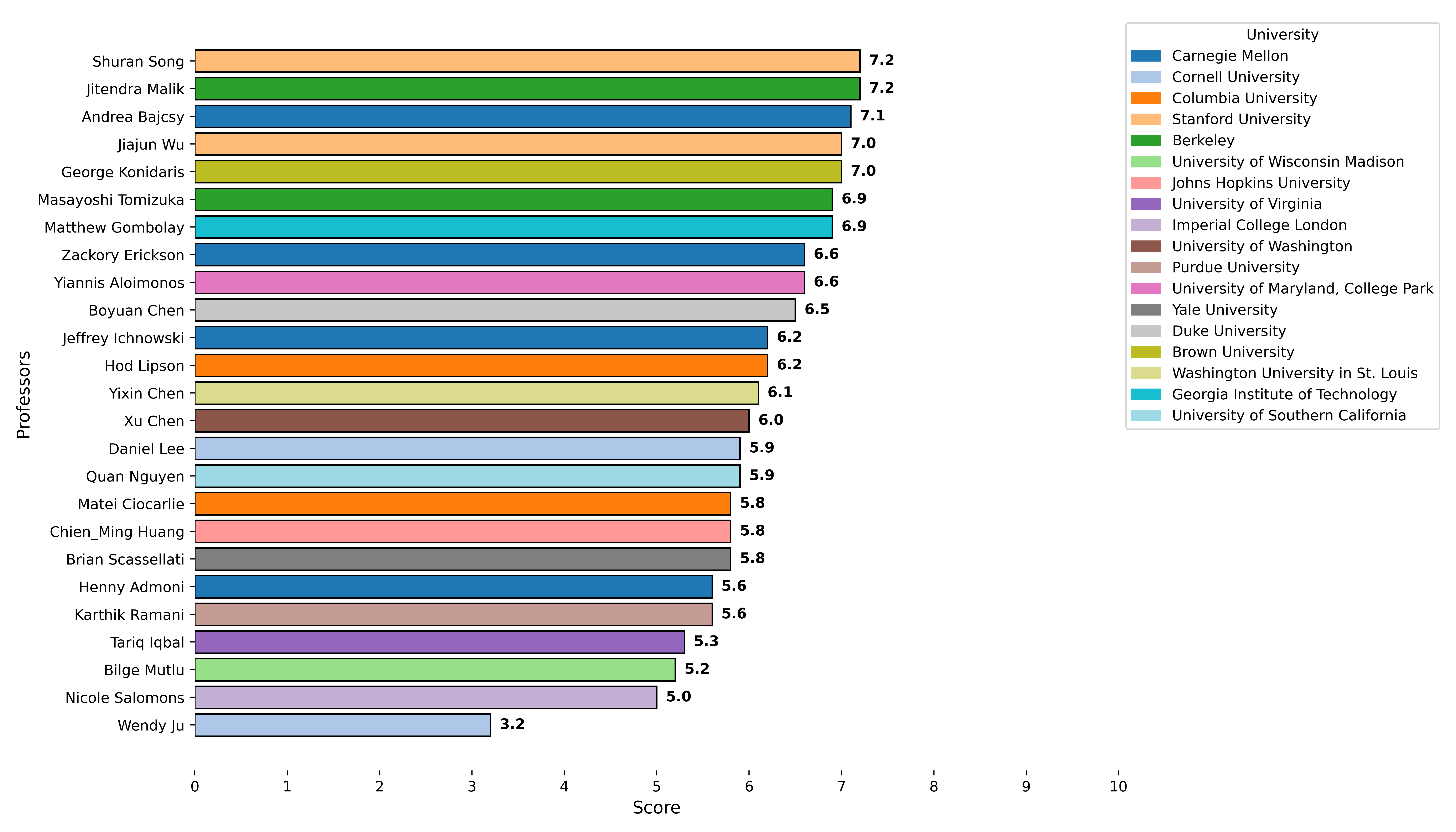

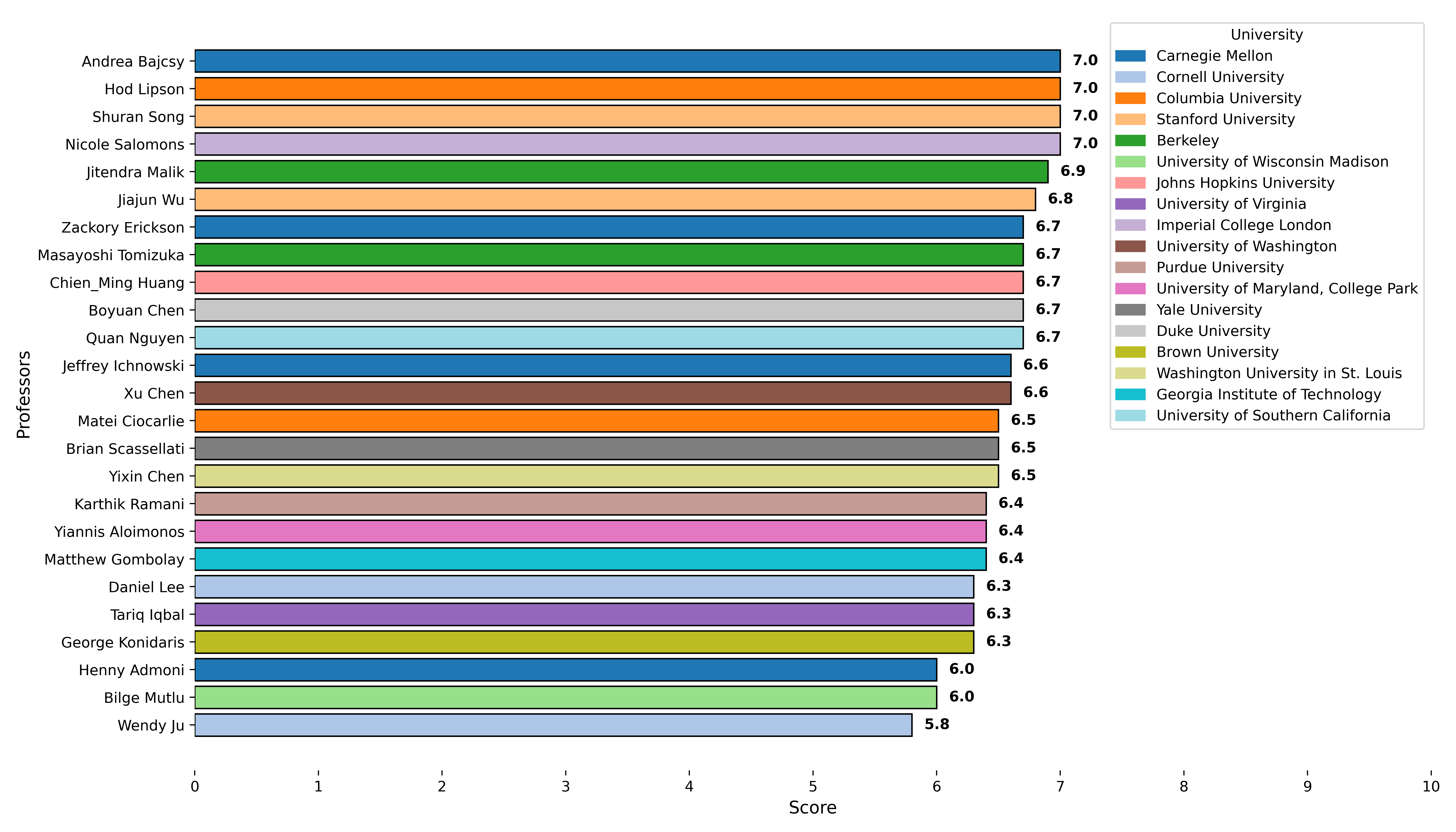

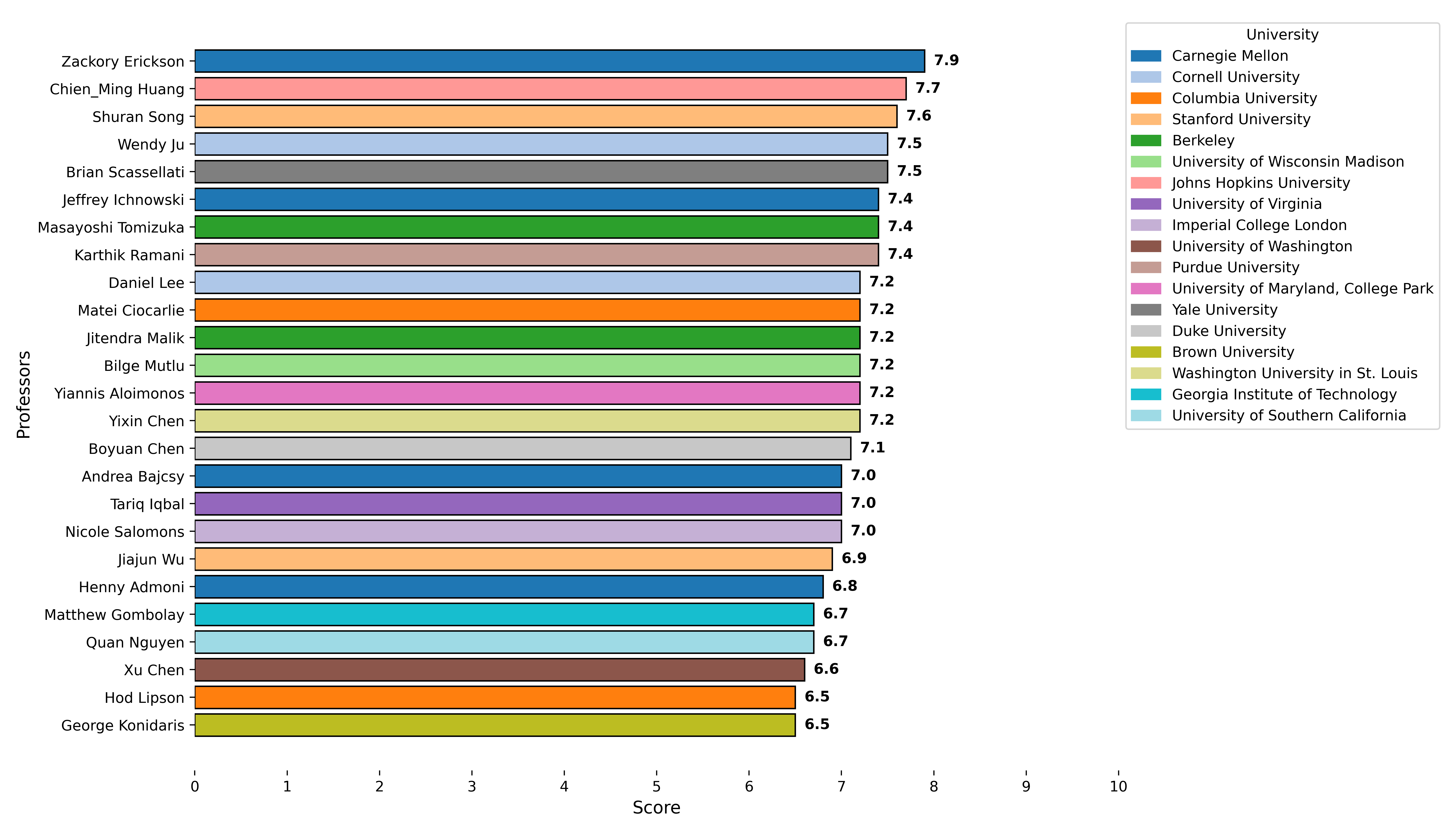

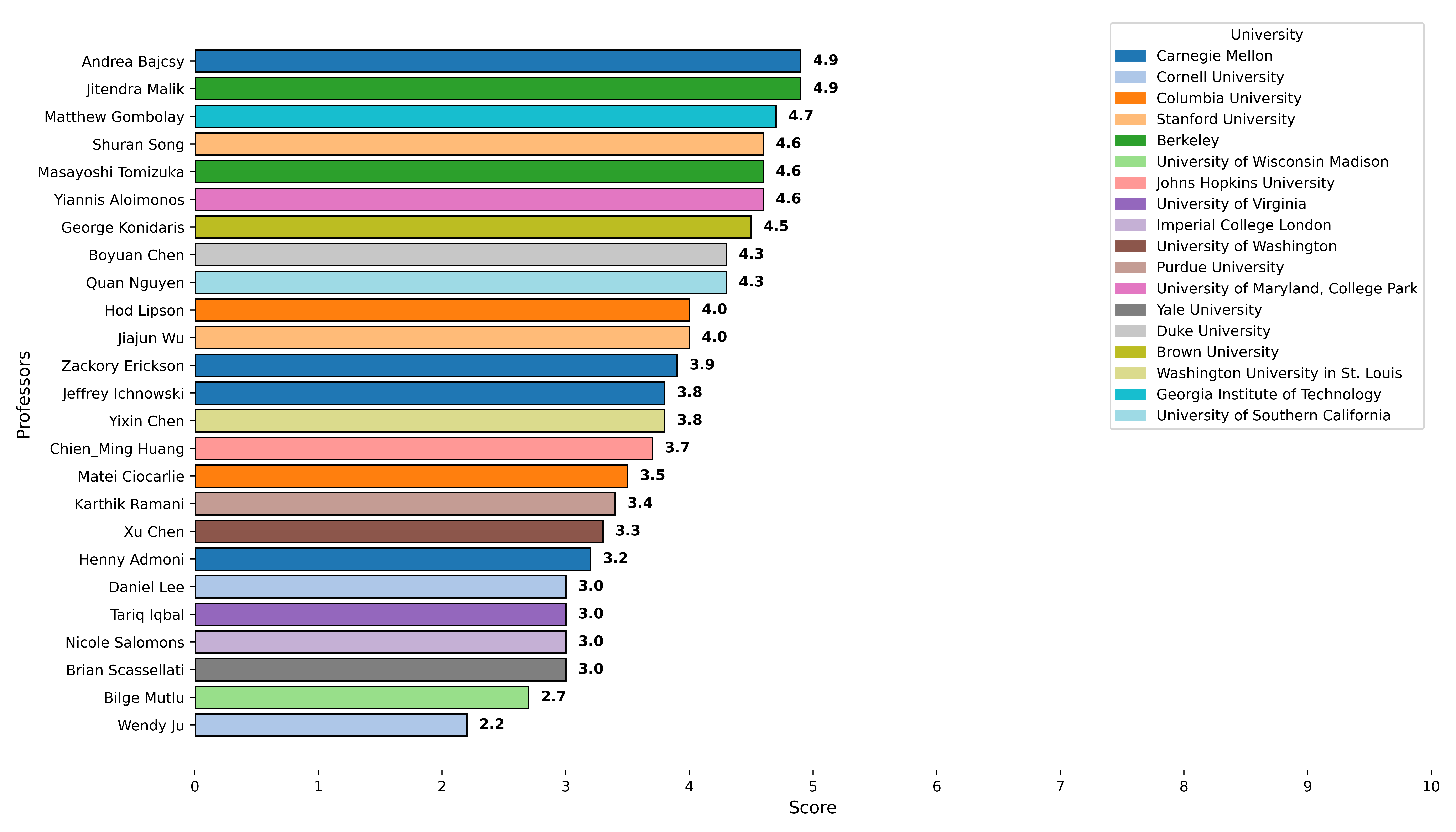

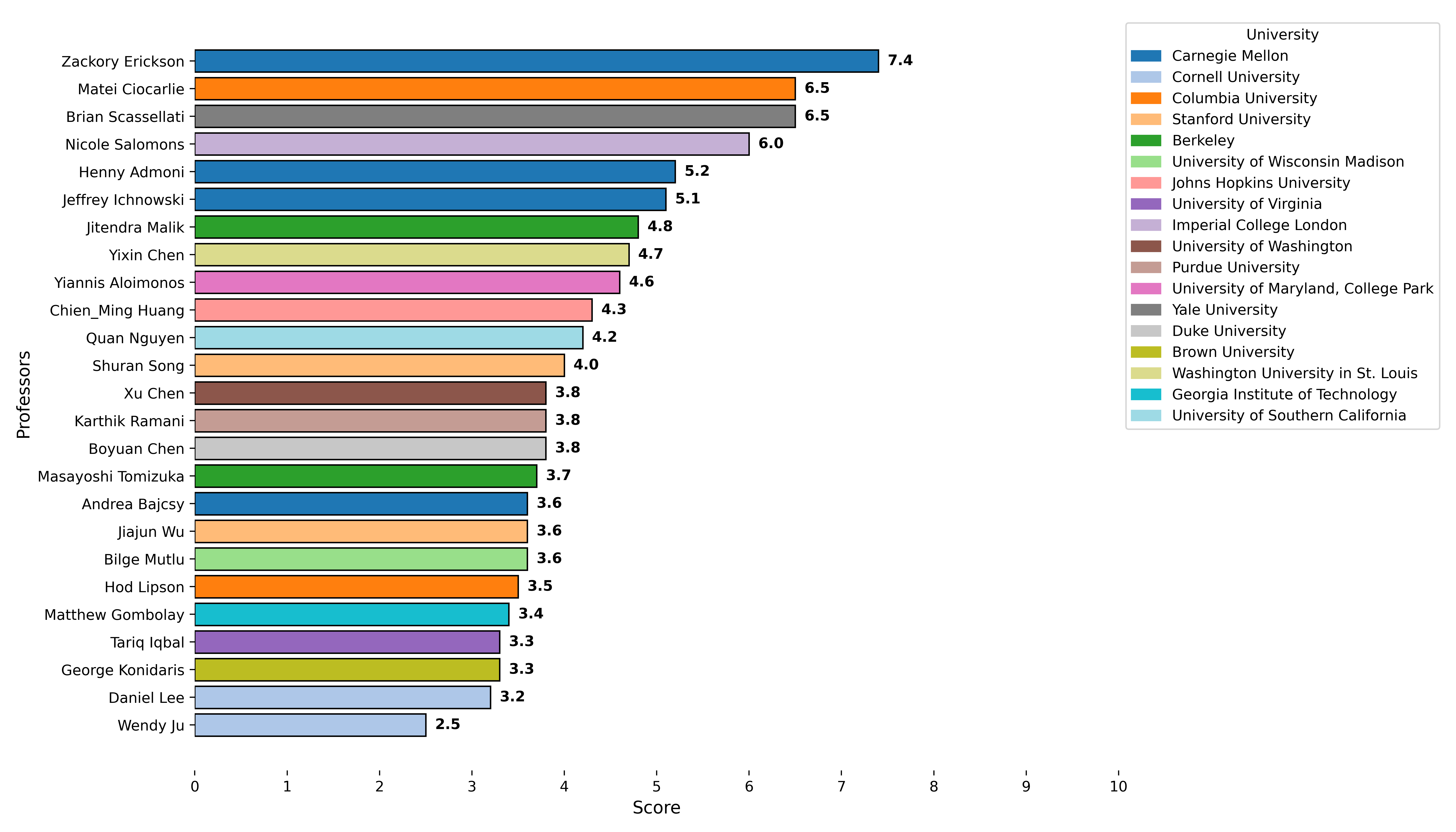

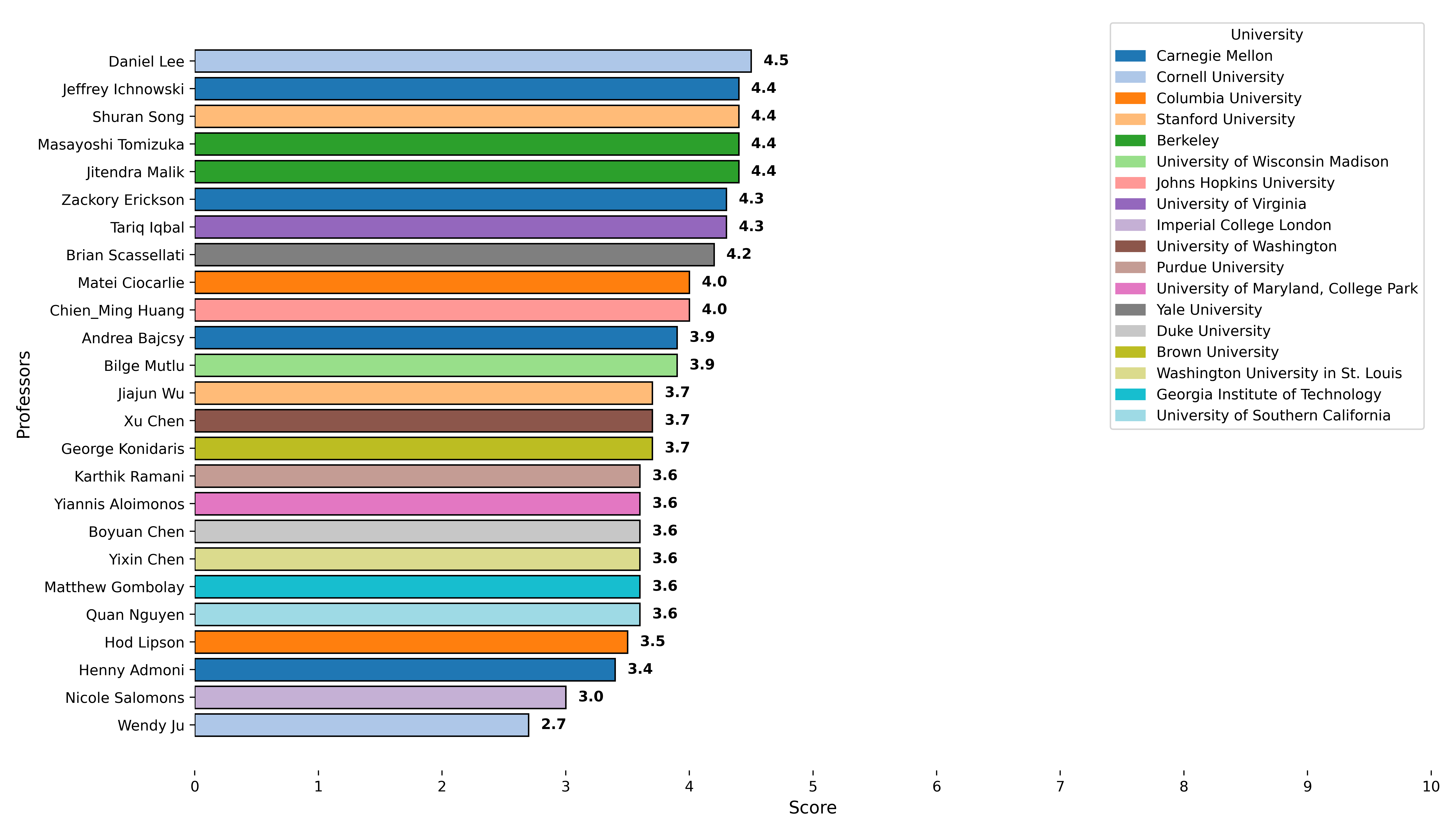

Bar Charts

The faculty featured in the bar charts are selected based on their historical track record in the field. Once key contributors are identified, they are assessed across multiple evaluation categories to capture the breadth and depth of their impact. To add another layer of insight, professors from the same institution are grouped by color in each chart. This helps highlight universities that may have a concentration of influential figures in the field. That said, the presence of more faculty from a given institution should not be interpreted as a definitive measure of overall quality; quantity does not necessarily equate to excellence in a particular domain.

Highlighted Publications

The highlighted papers are curated independently from the charts. These selections reflect the most relevant and important publications from the review period, as defined by the criteria for each evaluation category. The principal investigators behind these papers may or may not appear in the bar charts. If a publication is featured but the PI is not listed among the top faculty, this may indicate an emerging researcher or someone newly entering the field. As such, we encourage attention to both the bar charts and the highlighted publications to get a comprehensive picture of the key players and rising talent in the field.

Importance to the Field

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

Digitizing Touch with an Artificial Multimodal Fingertip (Score: 9.0/10)

Abstract: Touch is a crucial sensing modality that provides rich information about object properties and interactions with the physical environment. Humans and robots both benefit from using touch to perceive and interact with the surrounding environment (Johansson and Flanagan, 2009; Li et al., 2020; Calandra et al., 2017). However, no existing systems provide rich, multi-modal digital touch-sensing capabilities through a hemispherical compliant embodiment. Here, we describe several conceptual and technological innovations to improve the digitization of touch. These advances are embodied in an artificial finger-shaped sensor with advanced sensing capabilities. Significantly, this fingertip contains high-resolution sensors (8.3 million taxels) that respond to omnidirectional touch, capture multi-modal signals, and use on-device artificial intelligence to process the data in real time. Evaluations show that the artificial fingertip can resolve spatial features as small as 7 um, sense normal and shear forces with a resolution of 1.01 mN and 1.27 mN, respectively, perceive vibrations up to 10 kHz, sense heat, and even sense odor. Furthermore, it embeds an on-device AI neural network accelerator that acts as a peripheral nervous system on a robot and mimics the reflex arc found in humans. These results demonstrate the possibility of digitizing touch with superhuman performance. The implications are profound, and we anticipate potential applications in robotics (industrial, medical, agricultural, and consumer-level), virtual reality and telepresence, prosthetics, and e-commerce. Toward digitizing touch at scale, we open-source a modular platform to facilitate future research on the nature of touch.

Significance: The paper addresses a critical gap in AI and robotics: digitizing touch with rich, multi-modal sensing capabilities. The development of an artificial fingertip with superhuman performance has profound implications for various fields, including robotics, virtual reality, prosthetics, and e-commerce. The open-source nature of the platform further amplifies its importance by facilitating future research and development in the field. A potential pitfall is the complexity and cost associated with replicating the system, which might limit its accessibility to some researchers.

Authors:

- Mike Lambeta (Berkeley)

- Tingfan Wu (Berkeley)

- Ali Sengul (Berkeley)

- Victoria Rose Most (Berkeley)

- Nolan Black (Berkeley)

- Kevin Sawyer (Berkeley)

- Romeo Mercado (Berkeley)

- And others...

PI: Jitendra Malik (Berkeley)

Published: November 4, 2024

Social Group Human-Robot Interaction: A Scoping Review of Computational Challenges (Score: 8.0/10)

Abstract: Group interactions are a natural part of our daily life, and as robots become more integrated into society, they must be able to socially interact with multiple people at the same time. However, group human-robot interaction (HRI) poses unique computational challenges often overlooked in the current HRI literature. We conducted a scoping review including 44 group HRI papers from the last decade (2015-2024). From these papers, we extracted variables related to perception and behaviour generation challenges, as well as factors related to the environment, group, and robot capabilities that influence these challenges. Our findings show that key computational challenges in perception included detection of groups, engagement, and conversation information, while challenges in behaviour generation involved developing approaching and conversational behaviours. We also identified research gaps, such as improving detection of subgroups and interpersonal relationships, and recommended future work in group HRI to help researchers address these computational challenges.

Significance: The paper addresses a critical gap in HRI research by focusing on group interactions, which are more representative of real-world scenarios than dyadic interactions. The identification of computational challenges in perception and behavior generation, along with research gaps, provides a valuable roadmap for future research. However, the review's focus on specific venues might limit its scope.

Authors:

- Massimiliano Nigro (Imperial College London)

- Emmanuel Akinrintoyo (Imperial College London)

- Nicole Salomons (Imperial College London)

- Micol Spitale (Imperial College London)

PI: Nicole Salomons (Imperial College London)

Published: December 20, 2024

Student Reflections on Self-Initiated GenAI Use in HCI Education (Score: 8.0/10)

Abstract: Generative Artificial Intelligence's (GenAI) impact on Human-Computer Interaction (HCI) education and technology design is pervasive but poorly understood. This study examines how graduate students in an applied HCI course utilized GenAI tools across various stages of interactive device design. Although the course policy neither explicitly encouraged nor prohibited using GenAI, students independently integrated these tools into their work. Through conducting 12 post-class group interviews, we reveal the dual nature of GenAI: while it stimulates creativity and accelerates design iterations, it also raises concerns about shallow learning and over-reliance. Our findings indicate that GenAI's benefits are most pronounced in the Execution phase of the design process, particularly for rapid prototyping and ideation. In contrast, its use in the Discover phase and design reflection may compromise depth. This study underscores the complex role of GenAI in HCI education and offers recommendations for curriculum improvements to better prepare future designers for effectively integrating GenAI into their creative processes.

Significance: The research addresses a pressing question regarding the impact of GenAI on HCI education, a topic of significant current interest. The findings offer insights into how GenAI tools are being used by students and the potential benefits and risks associated with their use. The recommendations for curriculum improvements are valuable for educators. However, the study is limited to a single course, which may affect the broader applicability of the findings.

Authors:

- Hauke Sandhaus (Cornell University)

- Quiquan Gu (Cornell University)

- Maria Teresa Parreira (Cornell University)

- Wendy Ju (Cornell University)

PI: Wendy Ju (Cornell University)

Published: October 17, 2024

Towards Wearable Interfaces for Robotic Caregiving (Score: 8.0/10)

Abstract: Physically assistive robots in home environments can enhance the autonomy of individuals with impairments, allowing them to regain the ability to conduct self-care and household tasks. Individuals with physical limitations may find existing interfaces challenging to use, highlighting the need for novel interfaces that can effectively support them. In this work, we present insights on the design and evaluation of an active control wearable interface named HAT, Head-Worn Assistive Teleoperation. To tackle challenges in user workload while using such interfaces, we propose and evaluate a shared control algorithm named Driver Assistance. Finally, we introduce the concept of passive control, in which wearable interfaces detect implicit human signals to inform and guide robotic actions during caregiving tasks, with the aim of reducing user workload while potentially preserving the feeling of control.

Significance: The research addresses the important problem of providing assistive robotic solutions for individuals with impairments, which is a growing area of need. The focus on wearable interfaces and shared/passive control mechanisms directly tackles the challenges of user workload and control, which are critical for the adoption of such technologies. The in-home study with a user with quadriplegia highlights the potential impact. However, the limited number of participants in the studies is a limitation.

Authors:

- Akhil Padmanabha (Carnegie Mellon)

- Carmel Majidi (Carnegie Mellon)

- Zackory Erickson (Carnegie Mellon)

PI: Zackory Erickson (Carnegie Mellon)

Published: February 7, 2025

RoboCopilot: Human-in-the-loop Interactive Imitation Learning for Robot Manipulation (Score: 8.0/10)

Abstract: Learning from human demonstration is an effective approach for learning complex manipulation skills. However, existing approaches heavily focus on learning from passive human demonstration data for its simplicity in data collection. Interactive human teaching has appealing theoretical and practical properties, but they are not well supported by existing human-robot interfaces. This paper proposes a novel system that enables seamless control switching between human and an autonomous policy for bi-manual manipulation tasks, enabling more efficient learning of new tasks. This is achieved through a compliant, bilateral teleoperation system. Through simulation and hardware experiments, we demonstrate the value of our system in an interactive human teaching for learning complex bi-manual manipulation skills.

Significance: The research addresses the important problem of efficient robot learning from human demonstration, specifically focusing on interactive learning. Interactive learning has the potential to overcome limitations of passive imitation learning. The development of a system that facilitates seamless human-robot control switching is valuable. However, the reliance on human intervention could limit scalability.

Authors:

- Philipp Wu (Berkeley)

- Yide Shentu (Berkeley)

- Qiayuan Liao (Berkeley)

- Ding Jin (Berkeley)

- Menglong Guo (Berkeley)

- Koushil Sreenath (Berkeley)

- Xingyu Lin (Berkeley)

- Pieter Abbeel (Berkeley)

PI: Pieter Abbeel (Berkeley)

Published: March 10, 2025

ZeroHSI: Zero-Shot 4D Human-Scene Interaction by Video Generation (Score: 8.0/10)

Abstract: Human-scene interaction (HSI) generation is crucial for applications in embodied AI, virtual reality, and robotics. Yet, existing methods cannot synthesize interactions in unseen environments such as in-the-wild scenes or reconstructed scenes, as they rely on paired 3D scenes and captured human motion data for training, which are unavailable for unseen environments. We present ZeroHSI, a novel approach that enables zero-shot 4D human-scene interaction synthesis, eliminating the need for training on any MoCap data. Our key insight is to distill human-scene interactions from state-of-the-art video generation models, which have been trained on vast amounts of natural human movements and interactions, and use differentiable rendering to reconstruct human-scene interactions. ZeroHSI can synthesize realistic human motions in both static scenes and environments with dynamic objects, without requiring any ground-truth motion data. We evaluate ZeroHSI on a curated dataset of different types of various indoor and outdoor scenes with different interaction prompts, demonstrating its ability to generate diverse and contextually appropriate human-scene interactions.

Significance: Generating realistic human-scene interactions is crucial for embodied AI, VR/AR, and robotics. The paper addresses the limitation of existing methods that require paired 3D scenes and motion capture data, which are unavailable for unseen environments. By enabling zero-shot HSI synthesis, the research contributes to a more flexible and scalable approach to HSI generation. However, the reliance on video generation models means the quality of the generated interactions is limited by the quality of those models.

Authors:

- Hongjie Li (Stanford University)

- Hong-Xing Yu (Stanford University)

- Jiaman Li (Stanford University)

- Jiajun Wu (Stanford University)

PI: Jiajun Wu (Stanford University)

Published: December 24, 2024

Originality

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

ZeroHSI: Zero-Shot 4D Human-Scene Interaction by Video Generation (Score: 9.0/10)

Abstract: Human-scene interaction (HSI) generation is crucial for applications in embodied AI, virtual reality, and robotics. Yet, existing methods cannot synthesize interactions in unseen environments such as in-the-wild scenes or reconstructed scenes, as they rely on paired 3D scenes and captured human motion data for training, which are unavailable for unseen environments. We present ZeroHSI, a novel approach that enables zero-shot 4D human-scene interaction synthesis, eliminating the need for training on any MoCap data. Our key insight is to distill human-scene interactions from state-of-the-art video generation models, which have been trained on vast amounts of natural human movements and interactions, and use differentiable rendering to reconstruct human-scene interactions. ZeroHSI can synthesize realistic human motions in both static scenes and environments with dynamic objects, without requiring any ground-truth motion data. We evaluate ZeroHSI on a curated dataset of different types of various indoor and outdoor scenes with different interaction prompts, demonstrating its ability to generate diverse and contextually appropriate human-scene interactions.

Significance: The paper introduces a novel approach, ZeroHSI, for zero-shot 4D human-scene interaction synthesis. The key insight of distilling interactions from video generation models and using differentiable rendering is original. The formulation of the problem and the integration of different techniques are also novel. The approach eliminates the need for paired motion-scene training data, which is a significant contribution. However, the originality is somewhat tempered by the reliance on existing video generation models.

Authors:

- Hongjie Li (Stanford University)

- Hong-Xing Yu (Stanford University)

- Jiaman Li (Stanford University)

- Jiajun Wu (Stanford University)

PI: Jiajun Wu (Stanford University)

Published: December 24, 2024

Uncertainty Comes for Free: Human-in-the-Loop Policies with Diffusion Models (Score: 8.0/10)

Abstract: Human-in-the-loop (HitL) robot deployment has gained significant attention in both academia and industry as a semi-autonomous paradigm that enables human operators to intervene and adjust robot behaviors at deployment time, improving success rates. However, continuous human monitoring and intervention can be highly labor-intensive and impractical when deploying a large number of robots. To address this limitation, we propose a method that allows diffusion policies to actively seek human assistance only when necessary, reducing reliance on constant human oversight. To achieve this, we leverage the generative process of diffusion policies to compute an uncertainty-based metric based on which the autonomous agent can decide to request operator assistance at deployment time, without requiring any operator interaction during training. Additionally, we show that the same method can be used for efficient data collection for fine-tuning diffusion policies in order to improve their autonomous performance. Experimental results from simulated and real-world environments demonstrate that our approach enhances policy performance during deployment for a variety of scenarios.

Significance: The paper presents a novel approach to HitL robot deployment by leveraging the generative process of diffusion policies to compute an uncertainty-based metric. The idea of using the denoising process for uncertainty estimation and combining it with GMMs to capture multi-modality is original. The application of this method for both HitL deployment and policy fine-tuning further enhances its originality. A potential pitfall is the reliance on diffusion models, which might not be suitable for all robotic tasks.

Authors:

- Zhanpeng He (Columbia University)

- Yifeng Cao (Columbia University)

- Matei Ciocarlie (Columbia University)

PI: Matei Ciocarlie (Columbia University)

Published: February 26, 2025

Shape-Kit: A Design Toolkit for Crafting On-Body Expressive Haptics (Score: 8.0/10)

Abstract: Driven by the vision of everyday haptics, the HCI community is advocating for "design touch first"

and investigating "how to touch well." However, a gap remains between the exploratory nature of haptic design and

technical reproducibility. We present Shape-Kit, a hybrid design toolkit embodying our "crafting haptics" metaphor,

where hand touch is transduced into dynamic pin-based sensations that can be freely explored across the body. An ad-hoc

tracking module captures and digitizes these patterns. Our study with 14 designers and artists demonstrates how

Shape-Kit facilitates sensorial exploration for expressive haptic design. We analyze how designers collaboratively

ideate, prototype, iterate, and compose touch experiences and show the subtlety and richness of touch that can

be achieved through diverse crafting methods with Shape-Kit. Reflecting on the findings, our work contributes key

insights into haptic toolkit design and touch design practices centered on the "crafting haptics" metaphor. We discuss

in-depth how Shape-Kit's simplicity, though remaining constrained, enables focused crafting for deeper exploration,

while its collaborative nature fosters shared sense-making of touch experiences.

Significance: The Shape-Kit, with its hybrid approach of combining hand touch transduction with dynamic

pin-based sensations and an ad-hoc tracking module, appears to be a novel contribution. The 'crafting haptics'

metaphor is also a unique framing. The originality could be strengthened by a more thorough comparison to existing

shape-changing interfaces and haptic toolkits.

Authors:

- Ran Zhou (University of Chicago)

- Jianru Ding (University of Chicago)

- Chenfeng Gao (University of Chicago)

- Wanli Qian (University of Chicago)

- Benjamin Erickson (University of Chicago)

- Madeline Balaam (University of Chicago)

- Daniel Leithinger (University of Chicago)

- Ken Nakagaki (University of Chicago)

PI: Ken Nakagaki (University of Chicago)

Published: March 16, 2025

Don't Yell at Your Robot: Physical Correction as the Collaborative Interface for Language Model Powered Robots (Score: 8.0/10)

Abstract: We present a novel approach for enhancing human-robot collaboration using physical interactions for

real-time error correction of large language model (LLM) powered robots. Unlike other methods that rely on verbal or

text commands, the robot leverages an LLM to proactively execute 6 DoF linear Dynamical System (DS) commands

using a description of the scene in natural language. During motion, a human can provide physical corrections, used

to re-estimate the desired intention, also parameterized by linear DS. This corrected DS can be converted to natural

language and used as part of the prompt to improve future LLM interactions. We provide proof-of-concept results in

a hybrid real+sim experiment, showcasing physical interaction as a new possibility for LLM powered human-robot

interface.

Significance: The paper presents a novel approach by integrating physical corrections with LLM-powered robots.

The idea of using physical interaction for real-time error correction and feeding this information back to the LLM for

improved future interactions is innovative. The individual components (LLMs, DS, particle filters) are not

novel, but their integration in this context is.

Authors:

- Chuye Zhang (University of Pennsylvania)

- Yifei Simon Shao (University of Pennsylvania)

- Harshil Parekh (University of Pennsylvania)

- Junyao Shi (University of Pennsylvania)

- Pratik Chaudhari (University of Pennsylvania)

- Vijay Kumar (University of Pennsylvania)

- Nadia Figueroa (University of Pennsylvania)

PI: Vijay Kumar (University of Pennsylvania)

Published: December 17, 2024
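
A linear dynamical system command and its re-estimation after a physical correction can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's estimator (the significance note mentions particle filters): it uses position only rather than full 6 DoF, assumes a known scalar gain, and recovers the attractor by directly inverting the DS. All names and numbers are hypothetical.

```python
def ds_velocity(x, goal, gain):
    """Linear DS: x_dot = -gain * (x - goal), so motion converges to `goal`."""
    return [-gain * (xi - gi) for xi, gi in zip(x, goal)]

def implied_goal(x, x_dot, gain):
    """Invert the linear DS: the attractor consistent with an observed velocity."""
    return [xi + vi / gain for xi, vi in zip(x, x_dot)]

gain = 2.0                       # assumed convergence gain
x = [0.0, 0.0, 0.5]              # current end-effector position (m)
planned_goal = [1.0, 0.0, 0.5]   # attractor of the commanded motion

commanded = ds_velocity(x, planned_goal, gain)

# Suppose a person pushes the arm so that this velocity is observed instead.
corrected_velocity = [1.0, 1.0, 0.0]
corrected_goal = implied_goal(x, corrected_velocity, gain)
print(commanded, corrected_goal)
```

The corrected attractor is the kind of quantity that, per the abstract, could then be rendered in natural language and fed back into the prompt.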

Dual Control for Interactive Autonomous Merging with Model Predictive Diffusion (Score: 8.0/10)

Abstract: Interactive decision-making is essential in applications such as autonomous driving, where the agent

must infer the behavior of nearby human drivers while planning in real-time. Traditional predict-then-act frameworks

are often insufficient or inefficient because accurate inference of human behavior requires a continuous interaction

rather than isolated prediction. To address this, we propose an active learning framework in which we rigorously

derive predicted belief distributions. Additionally, we introduce a novel model-based diffusion solver tailored for

online receding horizon control problems, demonstrated through a complex, non-convex highway merging scenario.

Our approach extends previous high-fidelity dual control simulations to hardware experiments, which may be viewed

at https://youtu.be/Q-JdZuopGL4, and verifies behavior inference in human-driven traffic scenarios, moving beyond

idealized models. The results show improvements in adaptive planning under uncertainty, advancing the field of

interactive decision-making for real-world applications.

Significance: The paper introduces a novel variant of a model-based diffusion solver specifically designed for

receding horizon optimization. The integration of dual control with online Bayesian inference and the application

to real-world hardware experiments on F-Tenth cars demonstrate originality. The dynamic prior distribution in the

diffusion solver is a novel contribution. The individual components (dual control, diffusion models) are not

entirely new, but their combination and adaptation to this specific problem are original.

Authors:

- Jacob Knaup (Georgia Institute of Technology)

- Jovin D'sa (Georgia Institute of Technology)

- Behdad Chalaki (Georgia Institute of Technology)

- Hossein Nourkhiz Mahjoub (Georgia Institute of Technology)

- Ehsan Moradi-Pari (Georgia Institute of Technology)

- Panagiotis Tsiotras (Georgia Institute of Technology)

PI: Panagiotis Tsiotras (Georgia Institute of Technology)

Published: February 14, 2025
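
The online Bayesian inference of driver behavior mentioned above can be illustrated with a small discrete example. This is a sketch under assumptions, not the paper's formulation: the two driver types, their Gaussian acceleration models, and the observed values are all hypothetical.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a normal distribution, used here as the observation likelihood."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def update_belief(belief, observed_accel, models):
    """One Bayes step: posterior is proportional to prior times likelihood."""
    unnormalized = {
        name: belief[name] * gaussian_pdf(observed_accel, mean, std)
        for name, (mean, std) in models.items()
    }
    total = sum(unnormalized.values())
    return {name: value / total for name, value in unnormalized.items()}

# Hypothetical driver types: (mean acceleration in m/s^2, standard deviation).
models = {"yielding": (-1.0, 0.5), "assertive": (0.5, 0.5)}
belief = {"yielding": 0.5, "assertive": 0.5}   # uninformed prior

for accel in [-0.8, -1.1, -0.6]:               # assumed observations of the other car
    belief = update_belief(belief, accel, models)

print(belief)
```

In a dual control setting the planner would additionally choose actions that make such observations more informative; the sketch covers only the passive belief update.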

Theoretical Rigor

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

A Unified Framework for Robots that Influence Humans over Long-Term Interaction (Score: 8.0/10)

Abstract: Robot actions influence the decisions of nearby humans. Here influence refers to intentional change:

robots influence humans when they shift the human's behavior in a way that helps the robot complete its task.

Imagine an autonomous car trying to merge; by proactively nudging into the human's lane, the robot causes human

drivers to yield and provide space. Influence is often necessary for seamless interaction. However, if influence is

left unregulated and uncontrolled, robots will negatively impact the humans around them. Prior works have begun

to address this problem by creating a variety of control algorithms that seek to influence humans. Although these

methods are effective in the short-term, they fail to maintain influence over time as the human adapts to the robot's

behaviors. In this paper we therefore present an optimization framework that enables robots to purposely regulate

their influence over humans across both short-term and long-term interactions. Here the robot maintains its influence

by reasoning over a dynamic human model which captures how the robot's current choices will impact the human's

future behavior. Our resulting framework serves to unify current approaches: we demonstrate that state-of-the-art

methods are simplifications of our underlying formalism. Our framework also provides a principled way to generate

influential policies: in the best case the robot exactly solves our framework to find optimal, influential behavior. But

when solving this optimization problem becomes impractical, designers can introduce their own simplifications to

reach tractable approximations. We experimentally compare our unified framework to state-of-the-art baselines and

ablations, and demonstrate across simulations and user studies that this framework is able to successfully influence

humans over repeated interactions.

Significance: The paper provides a formal definition of influence in human-robot interaction and derives a dynamical system composed of the robot, human, and environment. The formulation of influence as a mixed-observability Markov decision process (MOMDP) is

theoretically sound and provides a basis for optimizing robot policies. The paper also includes theoretical derivations

showing how existing approaches can be seen as approximations of the unified framework. The reliance on specific

assumptions about human behavior and reward functions could be a limitation.

Authors:

- Dylan P. Losey (Carnegie Mellon)

- Shahabedin Sagheb (Carnegie Mellon)

- Sagar Parekh (Carnegie Mellon)

- Ravi Pandya (Carnegie Mellon)

- Ye-Ji Mun (Carnegie Mellon)

- Katherine Driggs-Campbell (Carnegie Mellon)

- Andrea Bajcsy (Carnegie Mellon)

PI: Andrea Bajcsy (Carnegie Mellon)

Published: March 18, 2025

Empirical Verification

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

Uncertainty Comes for Free: Human-in-the-Loop Policies with Diffusion Models (Score: 8.0/10)

Abstract: Human-in-the-loop (HitL) robot deployment has gained significant attention in both academia and

industry as a semi-autonomous paradigm that enables human operators to intervene and adjust robot behaviors at

deployment time, improving success rates. However, continuous human monitoring and intervention can be highly

labor-intensive and impractical when deploying a large number of robots. To address this limitation, we propose

a method that allows diffusion policies to actively seek human assistance only when necessary, reducing reliance

on constant human oversight. To achieve this, we leverage the generative process of diffusion policies to compute

an uncertainty-based metric based on which the autonomous agent can decide to request operator assistance at

deployment time, without requiring any operator interaction during training. Additionally, we show that the same

method can be used for efficient data collection for fine-tuning diffusion policies in order to improve their autonomous

performance. Experimental results from simulated and real-world environments demonstrate that our approach

enhances policy performance during deployment for a variety of scenarios.

Significance: The paper presents experimental results from both simulated and real-world environments, demonstrating the effectiveness of the proposed method in various deployment scenarios. The experiments cover different

types of deployment challenges, such as data distribution shifts, partial observability, and action multi-modality.

The comparison with state-of-the-art baselines provides further evidence of the method's superiority. However, the

number of real-world experiments is relatively small, and the results would benefit from stronger statistical support.

Authors:

- Zhanpeng He (Columbia University)

- Yifeng Cao (Columbia University)

- Matei Ciocarlie (Columbia University)

PI: Matei Ciocarlie (Columbia University)

Published: February 26, 2025

Dual Control for Interactive Autonomous Merging with Model Predictive Diffusion (Score: 8.0/10)

Abstract: Interactive decision-making is essential in applications such as autonomous driving, where the agent

must infer the behavior of nearby human drivers while planning in real-time. Traditional predict-then-act frameworks

are often insufficient or inefficient because accurate inference of human behavior requires a continuous interaction

rather than isolated prediction. To address this, we propose an active learning framework in which we rigorously

derive predicted belief distributions. Additionally, we introduce a novel model-based diffusion solver tailored for

online receding horizon control problems, demonstrated through a complex, non-convex highway merging scenario.

Our approach extends previous high-fidelity dual control simulations to hardware experiments, which may be viewed

at https://youtu.be/Q-JdZuopGL4, and verifies behavior inference in human-driven traffic scenarios, moving beyond

idealized models. The results show improvements in adaptive planning under uncertainty, advancing the field of

interactive decision-making for real-world applications.

Significance: The framework is validated through real-world hardware experiments on F-Tenth cars in a challenging, real-time traffic merging scenario. The comparison with baseline methods (DMPPI and EMPPI) provides

empirical evidence of the effectiveness of the proposed approach. The use of human-controlled vehicles adds to the

realism of the experiments. However, the scale of the experiments (number of trials, diversity of scenarios) could be

increased to further strengthen the empirical verification.

Authors:

- Jacob Knaup (Georgia Institute of Technology)

- Jovin D'sa (Georgia Institute of Technology)

- Behdad Chalaki (Georgia Institute of Technology)

- Hossein Nourkhiz Mahjoub (Georgia Institute of Technology)

- Ehsan Moradi-Pari (Georgia Institute of Technology)

- Panagiotis Tsiotras (Georgia Institute of Technology)

PI: Panagiotis Tsiotras (Georgia Institute of Technology)

Published: February 14, 2025

Augmenting Minds or Automating Skills: The Differential Role of Human Capital in Generative AI's Impact on Creative Tasks (Score: 8.0/10)

Abstract: Generative AI is rapidly reshaping creative work, raising critical questions about its beneficiaries and

societal implications. This study challenges prevailing assumptions by exploring how generative AI interacts with

diverse forms of human capital in creative tasks. Through two randomized controlled experiments in flash fiction writing

and song composition, we uncover a paradox: while AI democratizes access to creative tools, it simultaneously

amplifies cognitive inequalities. Our findings reveal that AI enhances general human capital (cognitive abilities and

education) by facilitating adaptability and idea integration but diminishes the value of domain-specific expertise.

We introduce a novel theoretical framework that merges human capital theory with the automation-augmentation

perspective, offering a nuanced understanding of human-AI collaboration. This framework elucidates how AI shifts the

locus of creative advantage from specialized expertise to broader cognitive adaptability. Contrary to the notion of AI as

a universal equalizer, our work highlights its potential to exacerbate disparities in skill valuation, reshaping workplace

hierarchies and redefining the nature of creativity in the AI era. These insights advance theories of human capital

and automation while providing actionable guidance for organizations navigating AI integration amidst workforce

inequalities.

Significance: The research employs two randomized controlled experiments to test the hypotheses. The use of

public evaluations and professional recordings enhances the ecological validity of the findings. The statistical analysis

is sound, using OLS regression and simple slope analysis. However, the reliance on self-reported measures for specific

human capital in the first experiment and potential biases in rater evaluations are limitations.

Authors:

- Meiling Huang (Virginia Tech)

- Ming Jin (Virginia Tech)

- Ning Li (Virginia Tech)

PI: Ming Jin (Virginia Tech)

Published: December 5, 2024

Generalizability

Highlighted Publications

No papers scored 8 or higher in this category during the review period.

Coding Sophistication

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

ZeroHSI: Zero-Shot 4D Human-Scene Interaction by Video Generation (Score: 8.0/10)

Abstract: Human-scene interaction (HSI) generation is crucial for applications in embodied AI, virtual reality,

and robotics. Yet, existing methods cannot synthesize interactions in unseen environments such as in-the-wild scenes

or reconstructed scenes, as they rely on paired 3D scenes and captured human motion data for training, which are

unavailable for unseen environments. We present ZeroHSI, a novel approach that enables zero-shot 4D human-scene

interaction synthesis, eliminating the need for training on any MoCap data. Our key insight is to distill human-scene

interactions from state-of-the-art video generation models, which have been trained on vast amounts of natural human

movements and interactions, and use differentiable rendering to reconstruct human-scene interactions. ZeroHSI can

synthesize realistic human motions in both static scenes and environments with dynamic objects, without requiring

any ground-truth motion data. We evaluate ZeroHSI on a curated dataset of various indoor and

outdoor scenes with different interaction prompts, demonstrating its ability to generate diverse and contextually

appropriate human-scene interactions.

Significance: The implementation of ZeroHSI requires sophisticated coding skills, including expertise in differentiable rendering, optimization techniques, and video processing. The integration of different libraries and frameworks

(e.g., PyTorch, TensorFlow) also adds to the complexity. The paper mentions the use of a Gaussian Avatar model and

DGS, which require significant coding effort.

Authors:

- Hongjie Li (Stanford University)

- Hong-Xing Yu (Stanford University)

- Jiaman Li (Stanford University)

- Jiajun Wu (Stanford University)

PI: Jiajun Wu (Stanford University)

Published: December 24, 2024

Dual Control for Interactive Autonomous Merging with Model Predictive Diffusion (Score: 8.0/10)

Abstract: Interactive decision-making is essential in applications such as autonomous driving, where the agent

must infer the behavior of nearby human drivers while planning in real-time. Traditional predict-then-act frameworks

are often insufficient or inefficient because accurate inference of human behavior requires a continuous interaction

rather than isolated prediction. To address this, we propose an active learning framework in which we rigorously

derive predicted belief distributions. Additionally, we introduce a novel model-based diffusion solver tailored for

online receding horizon control problems, demonstrated through a complex, non-convex highway merging scenario.

Our approach extends previous high-fidelity dual control simulations to hardware experiments, which may be viewed

at https://youtu.be/Q-JdZuopGL4, and verifies behavior inference in human-driven traffic scenarios, moving beyond

idealized models. The results show improvements in adaptive planning under uncertainty, advancing the field of

interactive decision-making for real-world applications.

Significance: The implementation of the proposed framework requires sophisticated coding skills, including expertise in optimization algorithms, Bayesian inference, diffusion models, and real-time control systems. The use of

parallel GPU-enabled processing using Jax indicates a high level of coding sophistication. The integration of these

components into a working autonomous driving system is a significant coding achievement.

Authors:

- Jacob Knaup (Georgia Institute of Technology)

- Jovin D'sa (Georgia Institute of Technology)

- Behdad Chalaki (Georgia Institute of Technology)

- Hossein Nourkhiz Mahjoub (Georgia Institute of Technology)

- Ehsan Moradi-Pari (Georgia Institute of Technology)

- Panagiotis Tsiotras (Georgia Institute of Technology)

PI: Panagiotis Tsiotras (Georgia Institute of Technology)

Published: February 14, 2025

Longevity

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

Digitizing Touch with an Artificial Multimodal Fingertip (Score: 8.0/10)

Abstract: Touch is a crucial sensing modality that provides rich information about object properties and interactions with the physical environment. Humans and robots both benefit from using touch to perceive and interact

with the surrounding environment (Johansson and Flanagan, 2009; Li et al., 2020; Calandra et al., 2017). However,

no existing systems provide rich, multi-modal digital touch-sensing capabilities through a hemispherical compliant

embodiment. Here, we describe several conceptual and technological innovations to improve the digitization of touch.

These advances are embodied in an artificial finger-shaped sensor with advanced sensing capabilities. Significantly,

this fingertip contains high-resolution sensors (~8.3 million taxels) that respond to omnidirectional touch, capture

multi-modal signals, and use on-device artificial intelligence to process the data in real time. Evaluations show that

the artificial fingertip can resolve spatial features as small as 7 µm, sense normal and shear forces with a resolution of

1.01 mN and 1.27 mN, respectively, perceive vibrations up to 10 kHz, sense heat, and even sense odor. Furthermore,

it embeds an on-device AI neural network accelerator that acts as a peripheral nervous system on a robot and mimics

the reflex arc found in humans. These results demonstrate the possibility of digitizing touch with superhuman performance. The implications are profound, and we anticipate potential applications in robotics (industrial, medical,

agricultural, and consumer-level), virtual reality and telepresence, prosthetics, and e-commerce. Toward digitizing

touch at scale, we open-source a modular platform to facilitate future research on the nature of touch.

Significance: The research is likely to have a lasting impact on the field of tactile sensing and robotics. The development of a high-performance artificial fingertip with multi-modal sensing capabilities and on-device AI processing

represents a significant advancement. The open-source nature of the platform will further contribute to its longevity

by enabling future research and development. However, the rapid pace of technological advancements in AI and

sensing technologies could eventually render some aspects of the research obsolete.

Authors:

- Mike Lambeta (Berkeley)

- Tingfan Wu (Berkeley)

- Ali Sengul (Berkeley)

- Victoria Rose Most (Berkeley)

- Nolan Black (Berkeley)

- Kevin Sawyer (Berkeley)

- Romeo Mercado (Berkeley)

- Haozhi Qi (Berkeley)

- Alexander Sohn (Berkeley)

- Byron Taylor (Berkeley)

- Norb Tydingco (Berkeley)

- Gregg Kammerer (Berkeley)

- Dave Stroud (Berkeley)

- Jake Khatha (Berkeley)

- Kurt Jenkins (Berkeley)

- Kyle Most (Berkeley)

- Neal Stein (Berkeley)

- Ricardo Chavira (Berkeley)

- Thomas Craven-Bartle (Berkeley)

- Eric Sanchez (Berkeley)

- Yitian Ding (Berkeley)

- Jitendra Malik (Berkeley)

- Roberto Calandra (Berkeley)

PI: Jitendra Malik (Berkeley)

Published: November 4, 2024

Practicality

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

RoboCopilot: Human-in-the-loop Interactive Imitation Learning for Robot Manipulation (Score: 8.0/10)

Abstract: Learning from human demonstration is an effective approach for learning complex manipulation skills.

However, existing approaches heavily focus on learning from passive human demonstration data for its simplicity

in data collection. Interactive human teaching has appealing theoretical and practical properties, but it is

not well supported by existing human-robot interfaces. This paper proposes a novel system that enables seamless

control switching between human and an autonomous policy for bi-manual manipulation tasks, enabling more efficient

learning of new tasks. This is achieved through a compliant, bilateral teleoperation system. Through simulation and

hardware experiments, we demonstrate the value of our system in interactive human teaching for learning complex

bi-manual manipulation skills.

Significance: The RoboCopilot system has the potential to be applied in various practical settings, such as

manufacturing, logistics, and healthcare. The system could be used to train robots to perform complex manipulation

tasks that are difficult to program manually. The cost and complexity of the system might limit its widespread

adoption.

Authors:

- Philipp Wu (Berkeley)

- Yide Shentu (Berkeley)

- Qiayuan Liao (Berkeley)

- Ding Jin (Berkeley)

- Menglong Guo (Berkeley)

- Koushil Sreenath (Berkeley)

- Xingyu Lin (Berkeley)

- Pieter Abbeel (Berkeley)

PI: Pieter Abbeel (Berkeley)

Published: March 10, 2025
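
The control switching described in this abstract can be pictured with a short loop in which a human may take over at any step and each takeover is logged as new training data. This is a generic interactive imitation learning sketch under assumptions, not the RoboCopilot implementation: the stand-in policy, the override rule, and the integer observations are hypothetical.

```python
def run_episode(observations, policy, human_override):
    """Run one episode, letting the human take over and logging each correction."""
    executed, corrections = [], []
    for obs in observations:
        human_action = human_override(obs)   # None means the human is not intervening
        if human_action is not None:
            corrections.append((obs, human_action))  # kept for later retraining
            executed.append(human_action)
        else:
            executed.append(policy(obs))
    return executed, corrections

def policy(obs):
    """Hypothetical stand-in for a learned policy."""
    return obs * 2

def human_override(obs):
    """Hypothetical teacher who intervenes only on observation 3."""
    return -1 if obs == 3 else None

executed, corrections = run_episode([1, 2, 3, 4], policy, human_override)
print(executed, corrections)
```

Only the steps where the human intervened produce new labeled pairs, which is one reason interactive teaching can be more data-efficient than passive demonstration.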

Towards Wearable Interfaces for Robotic Caregiving (Score: 8.0/10)

Abstract: Physically assistive robots in home environments can enhance the autonomy of individuals with impairments, allowing them to regain the ability to conduct self-care and household tasks. Individuals with physical

limitations may find existing interfaces challenging to use, highlighting the need for novel interfaces that can effectively support them. In this work, we present insights on the design and evaluation of an active control wearable

interface named HAT, Head-Worn Assistive Teleoperation. To tackle challenges in user workload while using such

interfaces, we propose and evaluate a shared control algorithm named Driver Assistance. Finally, we introduce the

concept of passive control, in which wearable interfaces detect implicit human signals to inform and guide robotic

actions during caregiving tasks, with the aim of reducing user workload while potentially preserving the feeling of

control.

Significance: The research has significant practical potential for improving the lives of individuals with impairments

and their caregivers. The wearable interfaces and shared/passive control mechanisms could enable more effective and

user-friendly assistive robotic solutions. The focus on real-world tasks and environments increases the likelihood of

practical translation. However, further development and testing are needed to address issues of robustness, reliability,

and cost.

Authors:

- Akhil Padmanabha (Carnegie Mellon)

- Carmel Majidi (Carnegie Mellon)

- Zackory Erickson (Carnegie Mellon)

PI: Zackory Erickson (Carnegie Mellon)

Published: February 7, 2025

Interruption Handling for Conversational Robots (Score: 8.0/10)

Abstract: Interruptions, a fundamental component of human communication, can enhance the dynamism and

effectiveness of conversations, but only when effectively managed by all parties involved. Despite advancements

in robotic systems, state-of-the-art systems still have limited capabilities in handling user-initiated interruptions

in real-time. Prior research has primarily focused on post hoc analysis of interruptions. To address this gap, we

present a system that detects user-initiated interruptions and manages them in real-time based on the interrupter's

intent (i.e., cooperative agreement, cooperative assistance, cooperative clarification, or disruptive interruption). The

system was designed based on interaction patterns identified from human-human interaction data. We integrated our

system into an LLM-powered social robot and validated its effectiveness through a timed decision-making task and

a contentious discussion task with 21 participants. Our system successfully handled 93.69% (n=104/111) of

user-initiated interruptions. We discuss our learnings and their implications for designing interruption-handling behaviors

in conversational robots.

Significance: The research has high practical potential. The interruption handling system can be integrated into

a variety of conversational robots, including those designed for companionship, assistance, or collaboration, in

applications such as customer service, education, and healthcare. Handling interruptions effectively can lead to more

efficient and satisfying interactions between humans and robots.

Authors:

- Shiye Cao (Johns Hopkins University)

- Jiwon Moon (Johns Hopkins University)

- Amama Mahmood (Johns Hopkins University)

- Victor Nikhil Antony (Johns Hopkins University)

- Ziang Xiao (Johns Hopkins University)

- Anqi Liu (Johns Hopkins University)

- Chien-Ming Huang (Johns Hopkins University)

PI: Chien-Ming Huang (Johns Hopkins University)

Published: January 2, 2025
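
The four interruption intents named in the abstract lend themselves to a simple dispatch structure. The sketch below is only illustrative: the intent categories come from the abstract, but the handler names and the mapping between intents and responses are hypothetical and are not taken from the paper.

```python
from enum import Enum

class InterruptionIntent(Enum):
    COOPERATIVE_AGREEMENT = "cooperative agreement"
    COOPERATIVE_ASSISTANCE = "cooperative assistance"
    COOPERATIVE_CLARIFICATION = "cooperative clarification"
    DISRUPTIVE = "disruptive interruption"

# Hypothetical response strategies; the paper's actual behaviors may differ.
HANDLERS = {
    InterruptionIntent.COOPERATIVE_AGREEMENT: "acknowledge_and_continue",
    InterruptionIntent.COOPERATIVE_ASSISTANCE: "accept_help_and_continue",
    InterruptionIntent.COOPERATIVE_CLARIFICATION: "pause_and_clarify",
    InterruptionIntent.DISRUPTIVE: "hold_floor_then_address",
}

def handle_interruption(intent):
    """Look up the response strategy for a classified interruption intent."""
    return HANDLERS[intent]

print(handle_interruption(InterruptionIntent.COOPERATIVE_CLARIFICATION))
```

Keeping the intent classification separate from the response table makes it straightforward to change a robot's behavior for one intent without retraining the classifier.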

Student Reflections on Self-Initiated GenAI Use in HCI Education (Score: 8.0/10)

Abstract: Generative Artificial Intelligence's (GenAI) impact on Human-Computer Interaction (HCI) education

and technology design is pervasive but poorly understood. This study examines how graduate students in an applied

HCI course utilized GenAI tools across various stages of interactive device design. Although the course policy neither

explicitly encouraged nor prohibited using GenAI, students independently integrated these tools into their work.

Through conducting 12 post-class group interviews, we reveal the dual nature of GenAI: while it stimulates creativity

and accelerates design iterations, it also raises concerns about shallow learning and over-reliance. Our findings indicate

that GenAI's benefits are most pronounced in the Execution phase of the design process, particularly for rapid

prototyping and ideation. In contrast, its use in the Discover phase and design reflection may compromise depth.

This study underscores the complex role of GenAI in HCI education and offers recommendations for curriculum

improvements to better prepare future designers for effectively integrating GenAI into their creative processes.

Significance: The research has practical implications for HCI educators and curriculum designers. The recommendations for curriculum improvements and the identification of potential pitfalls are directly applicable to educational

settings. The study provides valuable guidance for integrating GenAI tools into HCI education in a responsible and

effective manner.

Authors:

- Hauke Sandhaus (Cornell University)

- Quiquan Gu (Cornell University)

- Maria Teresa Parreira (Cornell University)

- Wendy Ju (Cornell University)

PI: Wendy Ju (Cornell University)

Published: October 17, 2024

Defense Relevance

Highlighted Publications

No papers scored 8 or higher in this category during the review period.

Healthcare Relevance

Highlighted Publications

Papers that scored 8 or higher in this category during the review period.

Humanoids in Hospitals: A Technical Study of Humanoid Surrogates for Dexterous Medical Interventions (Score: 9.0/10)

Abstract: The increasing demand for healthcare workers, driven by aging populations and labor shortages, presents

a significant challenge for hospitals. Humanoid robots have the potential to alleviate these pressures by leveraging

their human-like dexterity and adaptability to assist in medical procedures. This work conducted an exploratory study

on the feasibility of humanoid robots performing direct clinical tasks through teleoperation. A bimanual teleoperation

system was developed for the Unitree G1 Humanoid Robot, integrating high-fidelity pose tracking, custom grasping

configurations, and an impedance controller to safely and precisely manipulate medical tools. The system is evaluated

in seven diverse medical procedures, including physical examinations, emergency interventions, and precision needle

tasks. Our results demonstrate that humanoid robots can successfully replicate critical aspects of human medical

assessments and interventions, with promising quantitative performance in ventilation and ultrasound-guided tasks.

However, challenges remain, including limitations in force output for procedures requiring high strength and sensor

sensitivity issues affecting clinical accuracy. This study highlights the potential and current limitations of humanoid

robots in hospital settings and lays the groundwork for future research on robotic healthcare integration.

Significance: The research has significant healthcare applications. The system could potentially assist with physical

examinations, emergency interventions, and precision needle tasks. The potential benefits include reducing workload

for healthcare workers, improving patient outcomes, and increasing access to medical care in remote areas. However,

significant improvements in robot capabilities and safety are needed before the system can be widely adopted.

Authors:

- Soofiyan Atar (University of California, San Diego)

- Xiao Liang (University of California, San Diego)

- Calvin Joyce (University of California, San Diego)

- Florian Richter (University of California, San Diego)

- Wood Ricardo (University of California, San Diego)

- Charles Goldberg (University of California, San Diego)

- Preetham Suresh (University of California, San Diego)

- Michael Yip (University of California, San Diego)

PI: Michael Yip (University of California, San Diego)

Published: March 17, 2025
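
The abstract above mentions an impedance controller for safe, precise tool manipulation but gives no implementation details. As a rough illustration only, the following sketch shows the textbook one-dimensional form of impedance control, a virtual spring-damper that pulls the end effector toward a target. The gain values, the unit mass, the time step, and the names `ImpedanceGains`, `impedance_force`, and `simulate` are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceGains:
    # Hypothetical gains chosen for illustration; not values from the paper.
    stiffness: float  # virtual spring constant, N/m
    damping: float    # virtual damper constant, N*s/m


def impedance_force(gains, x_des, x, v_des=0.0, v=0.0):
    """Virtual spring-damper law: F = K * (x_des - x) + D * (v_des - v)."""
    return gains.stiffness * (x_des - x) + gains.damping * (v_des - v)


def simulate(gains, x_des, mass=1.0, dt=0.001, steps=5000):
    """Drive a point mass toward x_des using the impedance force."""
    x, v = 0.0, 0.0
    for _ in range(steps):
        f = impedance_force(gains, x_des, x, 0.0, v)
        v += (f / mass) * dt  # semi-implicit Euler: update velocity first
        x += v * dt           # then position, using the new velocity
    return x


# With K = 100 and D = 20 on a 1 kg mass the system is critically damped.
gains = ImpedanceGains(stiffness=100.0, damping=20.0)
final_position = simulate(gains, x_des=0.05)
```

Lower stiffness makes the simulated contact more compliant at the cost of tracking accuracy. A real bimanual system would apply a comparable law in six-dimensional task space, with gains tuned per procedure.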

Towards Wearable Interfaces for Robotic Caregiving (Score: 9.0/10)

Abstract: Physically assistive robots in home environments can enhance the autonomy of individuals with impairments, allowing them to regain the ability to conduct self-care and household tasks. Individuals with physical

limitations may find existing interfaces challenging to use, highlighting the need for novel interfaces that can effectively support them. In this work, we present insights on the design and evaluation of an active control wearable

interface named HAT, Head-Worn Assistive Teleoperation. To tackle challenges in user workload while using such

interfaces, we propose and evaluate a shared control algorithm named Driver Assistance. Finally, we introduce the

concept of passive control, in which wearable interfaces detect implicit human signals to inform and guide robotic

actions during caregiving tasks, with the aim of reducing user workload while potentially preserving the feeling of

control.

Significance: The research has direct healthcare applications for improving the quality of life for

individuals with disabilities, elderly individuals, and those recovering from injuries or illnesses. The assistive robotic

solutions could enable individuals to regain independence, perform daily tasks, and reduce the burden on caregivers.

The focus on robot-assisted feeding and dressing highlights the potential for addressing specific healthcare needs.

Authors:

- Akhil Padmanabha (Carnegie Mellon)

- Carmel Majidi (Carnegie Mellon)

- Zackory Erickson (Carnegie Mellon)

PI: Zackory Erickson (Carnegie Mellon)

Published: February 7, 2025

User-Centered Design of Socially Assistive Robotic Combined with Non-Immersive Virtual Reality-based Dyadic Activities for Older Adults Residing in Long Term Care Facilities (Score: 9.0/10)

Abstract: Apathy impairs the quality of life for older adults and their care providers. While few pharmacological

remedies exist, current non-pharmacologic approaches are resource intensive. To address these concerns, this study

utilizes a user-centered design (UCD) process to develop and test a set of dyadic activities that provide physical,

cognitive, and social stimuli to older adults residing in long-term care (LTC) communities. Within the design, a

novel framework that combines socially assistive robots and non-immersive virtual reality (SAR-VR) emphasizing

human-robot interaction (HRI) and human-computer interaction (HCI) is utilized with the roles of the robots being

coach and entertainer. An interdisciplinary team of engineers, nurses, and physicians collaborated with an advisory

panel comprising LTC activity coordinators, staff, and residents to prototype the activities. The study resulted

in four virtual activities: three with the humanoid robot, Nao, and one with the animal robot, Aibo. Fourteen

participants tested the acceptability of the different components of the system and provided feedback at different

stages of development. Participant approval increased significantly over successive iterations of the system highlighting

the importance of stakeholder feedback. Five LTC staff members successfully set up the system with minimal help

from the researchers, demonstrating the usability of the system for caregivers. Rationale for activity selection, design

changes, and both quantitative and qualitative results on the acceptability and usability of the system have been

presented. The paper discusses the challenges encountered in developing activities for older adults in LTCs and

underscores the necessity of the UCD process to address them.

Significance: The research has significant healthcare applications. The SAR-VR system could be used to address

apathy, improve cognitive function, and enhance social engagement in older adults with dementia or other neurodegenerative diseases. The system could also be used in rehabilitation programs for patients with stroke, traumatic

brain injury, or other conditions that affect cognitive or motor function. The healthcare relevance score is high due

to the direct impact on improving the well-being of older adults.

Authors:

- Ritam Ghosh (Vanderbilt University)

- Nibraas Khan (Vanderbilt University)

- Miroslava Migovich (Vanderbilt University)

- Judith A. Tate (Vanderbilt University)

- Cathy Maxwell (Vanderbilt University)

- Emily Latshaw (Vanderbilt University)

- Paul Newhouse (Vanderbilt University)

- Douglas W. Scharre (Vanderbilt University)

- Alai Tan (Vanderbilt University)

- Kelley Colopietro (Vanderbilt University)

- Lorraine C. Mion (Vanderbilt University)

- Nilanjan Sarkar (Vanderbilt University)

PI: Nilanjan Sarkar (Vanderbilt University)

Published: October 28, 2024

EEG-Based Analysis of Brain Responses in Multi-Modal Human-Robot Interaction: Modulating Engagement (Score: 8.0/10)

Abstract: User engagement, cognitive participation, and motivation during task execution in physical human-robot

interaction are crucial for motor learning. These factors are especially important in contexts like robotic rehabilitation,

where neuroplasticity is targeted. However, traditional robotic rehabilitation systems often face challenges in maintaining user engagement, leading to unpredictable therapeutic outcomes. To address this issue, various techniques,

such as assist-as-needed controllers, have been developed to prevent user slacking and encourage active participation.

In this paper, we introduce a new direction through a novel multi-modal robotic interaction designed to enhance

user engagement by synergistically integrating visual, motor, cognitive, and auditory (speech recognition) tasks into

a single, comprehensive activity. To evaluate engagement quantitatively, we compared multiple electroencephalography

(EEG) biomarkers between this multi-modal protocol and a traditional motor-only protocol. Fifteen healthy adult

participants completed 100 trials of each task type. Our findings revealed that EEG biomarkers, particularly relative

alpha power, showed statistically significant improvements in engagement during the multi-modal task compared

to the motor-only task. Moreover, while engagement decreased over time in the motor-only task, the multi-modal

protocol maintained consistent engagement, suggesting that users could remain engaged for longer therapy sessions.

Our observations on neural responses during interaction indicate that the proposed multi-modal approach can effectively enhance user engagement, which is critical for improving outcomes. This is the first time that objective neural

responses have highlighted the benefit of a comprehensive robotic intervention combining motor, cognitive, and auditory

functions in healthy subjects.

Significance: The research has significant implications for healthcare, particularly in the field of robotic rehabilitation. The findings suggest that multi-modal interventions can improve patient engagement and potentially enhance

neuroplasticity, leading to better motor recovery. The use of EEG to monitor engagement could personalize therapy

protocols and optimize treatment outcomes.

Authors:

- Suzanne Oliver (New York University)

- Tomoko Kitago (New York University)

- Adam Buchwald (New York University)

- S. Farokh Atashzar (New York University)

PI: S. Farokh Atashzar (New York University)

Published: November 27, 2024
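
The abstract above names relative alpha power as the most informative EEG biomarker but does not describe how it was computed. One common definition is the share of spectral power that falls in the alpha band. The sketch below illustrates that definition with a simple windowed periodogram; the 8 to 13 Hz alpha band, the 1 to 40 Hz reference band, the 250 Hz sampling rate, and the function name `relative_band_power` are assumptions made for illustration, not details from the paper.

```python
import numpy as np


def relative_band_power(signal, fs, band=(8.0, 13.0), total=(1.0, 40.0)):
    """Fraction of spectral power inside `band` relative to `total` (Hz)."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()        # remove the DC offset
    window = np.hanning(signal.size)       # taper to limit spectral leakage
    spectrum = np.fft.rfft(signal * window)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    power = np.abs(spectrum) ** 2          # unnormalized periodogram

    in_band = (freqs >= band[0]) & (freqs <= band[1])
    in_total = (freqs >= total[0]) & (freqs <= total[1])
    return power[in_band].sum() / power[in_total].sum()


# Synthetic single-channel check: a 10 Hz tone plus a weaker 20 Hz tone.
fs = 250.0                                 # assumed sampling rate, Hz
t = np.arange(0, 4.0, 1.0 / fs)
eeg_like = np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)
alpha_fraction = relative_band_power(eeg_like, fs)
```

Because the ratio is normalized by total power, it is less sensitive to amplitude differences between participants or sessions than absolute band power. A real pipeline would also need artifact rejection and averaging across trials and channels, which this sketch omits.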

Inclusion in Assistive Haircare Robotics: Practical and Ethical Considerations in Hair Manipulation (Score: 8.0/10)

Abstract: Robot haircare systems could provide a controlled and personalized environment that is respectful of

an individual's sensitivities and may offer a comfortable experience. We argue that because of hair and hairstyles'

often unique importance in defining and expressing an individual's identity, we should approach the development of

assistive robot haircare systems carefully while considering various practical and ethical concerns and risks. In this

work, we specifically list and discuss the consideration of hair type, expression of the individual's preferred identity,

cost accessibility of the system, culturally-aware robot strategies, and the associated societal risks. Finally, we discuss

the planned studies that will allow us to better understand and address the concerns and considerations we outlined

in this work through interactions with both haircare experts and end-users. Through these practical and ethical

considerations, this work seeks to systematically organize and provide guidance for the development of inclusive and

ethical robot haircare systems.

Significance: The paper has significant implications for healthcare, particularly in the context of elderly care,

disability support, and mental health. Assistive haircare robots could improve the quality of life for individuals who

have difficulty performing these tasks independently. The focus on personalization and user preferences could also

enhance the therapeutic benefits of haircare. The potential to reduce sensory overload for neurodivergent individuals

is also a significant healthcare application.

Authors:

- Uksang Yoo (Carnegie Mellon)

- Nathaniel Dennler (Carnegie Mellon)

- Sarvesh Patil (Carnegie Mellon)

- Jean Oh (Carnegie Mellon)

- Jeffrey Ichnowski (Carnegie Mellon)

PI: Jeffrey Ichnowski (Carnegie Mellon)

Published: November 7, 2024

Technology Readiness Level

Highlighted Publications

No papers achieved a Technology Readiness Level (TRL) of 6 or higher during the review period.

Industry Integration

(Prepared for Toyota Research Institute)

| Paper | Capability | Potential Integration |
|---|---|---|
| Digitizing Touch with an Artificial Multimodal Fingertip | Provides robots with superhuman tactile sensing (high-res pressure, shear, vibration, heat, odor) with on-device processing. | Integrate sensor into TRI's humanoid or assistive robot grippers to enable delicate manipulation, object identification, and safer physical interaction in home environments or potentially for advanced driver assistance systems (e.g., sensing grip on steering wheel). |
| Towards Wearable Interfaces for Robotic Caregiving | Enables intuitive, low-workload control of assistive robots via head-worn interfaces and shared/passive control algorithms. | Develop assistive robots for TRI's aging-in-place initiatives controlled via these interfaces, allowing users with limited mobility to operate robots for daily tasks (feeding, dressing). |
| RoboCopilot: Human-in-the-loop Interactive Imitation Learning for Robot Manipulation | Provides an efficient method (interactive learning via seamless teleoperation switching) to teach robots complex manipulation skills. | Use RoboCopilot framework to accelerate the training of TRI's robots for complex household or assembly tasks, reducing programming time and leveraging human intuition. |